Chapter 11 Regression as statistical modelling

One-way ANOVA is based on an underlying statistical model. Statistical modelling can allow you to extract more information from your data than some simpler “test”-based methods that you may have learned previously. Most contemporary papers in ecology use models of some kind. Even if the nature of the data you collect limits your options, it is very important to learn to fit and interpret statistical models in order to follow the literature.

11.0.0.1 What is a statistical model?

To some extent statistical modelling is easier than other forms of ecological modelling. Building process-based models requires a great deal of understanding of a system. Statistical models are built from the data themselves. Provided you have some data to work with, you can let the combination of data and prebuilt algorithms find a model. However, there are many issues that make statistical modelling challenging.

A statistical model is a formal mathematical representation of the “sample space”, in other words the population from which measurements could have been drawn. It has two components.

- An underlying “deterministic” component, that usually represents a process of interest

- A stochastic component representing “unexplained” variability

So, a statistical model effectively partitions variability into two parts. One part represents some form of potentially interesting relationship between variables. The other part is just “random noise”. Because statistics is all about variability, the “noise” component is actually very important. The variability must be looked at in detail in order to decide on the right model. Many of the challenges involved in choosing between statistical models involve finding a way to “explain” the “unexplained” variability. This fundamental contradiction is why statistical concepts are so hard to understand.

11.0.1 Uses of models

The literature on statistical modelling frequently uses the terms “explanation” and “prediction” to describe the way models are used. Although the same model can have both roles, it is worth thinking about the difference between them before fitting and interpreting any models.

11.0.1.1 Prediction

Models can be used to predict the values for some variable when we are given information regarding some other variable upon which it depends. An example is a calibration curve used in chemistry. We know that conductivity and salinity are directly related. So if we measure the conductivity of liquids with known salinities we can fit a line. We can then use the resulting model to predict salinity at any point between the two extremes that we used when finding the calibration curve. Notice that we cannot easily extrapolate beyond the range of data we have. We will see how the same concept applies to the models we use in quantitative ecology.
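A calibration curve of this kind can be sketched in a few lines of R. The conductivity and salinity values below are invented purely for illustration, as are the object names; only lm and predict are standard R.

```r
# Hypothetical calibration standards: invented values, not real measurements
calib <- data.frame(
  salinity     = c(0, 5, 10, 15, 20, 25, 30, 35),
  conductivity = c(0.1, 8.9, 17.2, 24.8, 32.1, 39.0, 46.2, 53.1)
)

# Fit the calibration line: salinity as a function of conductivity
cal.mod <- lm(salinity ~ conductivity, data = calib)

# Predict salinity for a new reading that lies inside the calibrated range
new.reading <- data.frame(conductivity = 28)
pred <- predict(cal.mod, new.reading)
pred

# Check that the reading is inside the range used for calibration;
# a reading outside it would be an extrapolation
inside.range <- new.reading$conductivity >= min(calib$conductivity) &
  new.reading$conductivity <= max(calib$conductivity)
inside.range
```

The range check is the part that carries over to ecological models: a prediction is only as trustworthy as the coverage of the data used to fit the line.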

Predictions are more reliable if a large portion of the variability in the data can be “explained” through relationships with other variables. The more random variability in the data, the more difficult prediction becomes.

11.0.1.2 Explanation

The idea that the variability in the data can be “explained” by some variable comes from the terminology that was used when interpreting experimental data. Experiments usually involve a manipulation and a control of some description. If values for some response differ between control and intervention then it is reasonable to assume that the difference is “explained” by the intervention. If you wish to obtain data that are simple to analyse and interpret you should always design an experiment. However, experiments can be costly. Ecological systems are often slow to respond to interventions, making experiments impossible within the time scale of a master’s dissertation. We are often interested in systems that cannot be easily modified anyway on ethical or practical grounds. Thus in ecology we often have to interpret associations between variables as evidence of processes that “explain” a relationship. Correlation is not causation. Although patterns of association can provide insight into causality they are not enough to establish it. So, when you read the words “variance explained” look carefully at the sort of data that are being analysed. In an ecological setting this may only suggest close association between variables, not a true explanation in the everyday sense of the word.

When there is a great deal of variability in the data we may want to ask whether any of the variability at all is “explained” by other variables. This is where statistical tests and p-values have a purpose. When faced with small amounts of noisy data it can be useful simply to ask whether more variability is associated with some variable than would occur by chance.

11.1 The general linear model

General linear models lie behind a large number of techniques. These have different names depending on the type of data used to explain or predict the variability in a numerical response variable.

- Regression (Numerical variable)

- Analysis of variance (One or more categorical variables)

- Analysis of covariance (Categorical variable plus numerical variable)

- Multiple regression (Multiple numerical variables)

Although these analyses are given different names and they appear in different parts of the menu in a program such as SPSS, they are all based on a similar mathematical approach to model fitting. In R the same model syntax is used in all cases. The steps needed to build any linear model are…

- Look at the data carefully without fitting any model. Try different ways of plotting the data. Look for patterns in the data that suggest that they are suitable for modelling.

- Fit a model: The standard R syntax for a simple linear regression model is mod<-lm(y~x). However, model formulae may be much more complex.

- Look at whether the terms that have been entered in the model are significant: The simplest R syntax is anova(mod). Again, this part of the process can become much more involved in the case of models with many terms.

- Summarise the model in order to understand the structure of the model. This can be achieved with summary(mod)

- Run diagnostics to check that assumptions are adequately met. This involves a range of techniques including statistical tests and graphical diagnoses. This step is extremely important and must be addressed carefully in order to ensure that the results of the exercise are reliable.

11.2 Regression

11.2.1 Theory

The regression equation is

\(y=a+bx+\epsilon\) where \(\epsilon \sim N(0,\sigma^{2})\)

In other words it is a straight line with a as the intercept, b as the slope, with the assumption of normally distributed errors (variability around the line).

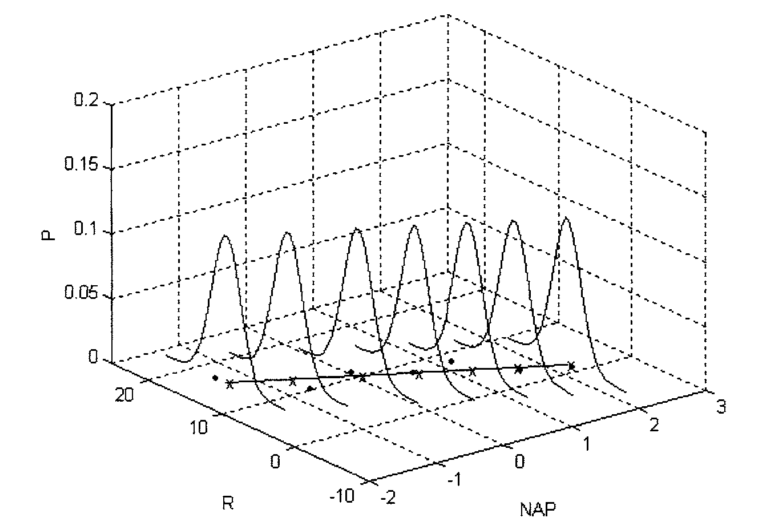

The diagram below taken from Zuur et al (2007) illustrates the basic idea. In theory, if we had an infinite number of observations around each point in a fitted model, the variability would form a perfect normal distribution. The shape and width of the normal curves would be constant along the length of the model. These normal curves form the stochastic part of the model. The straight line is the deterministic part. This represents the relationship we are usually most interested in. The line is defined by its intercept with the axis and its slope.

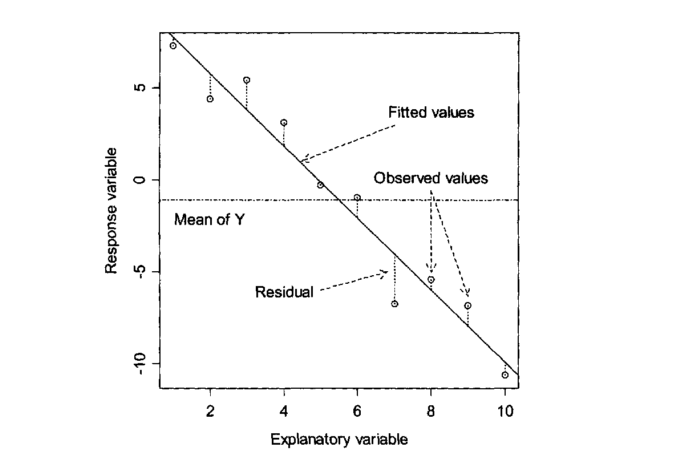

For any observed value of y there will be a fitted value (or predicted value) that falls along the line. The difference between the fitted value and the observed value is known as the residual.

In reality we do not have infinite observed values at each point. However if we collected all the residuals together we should get a normal distribution.
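The two parts of the model can be made concrete with a small simulation. This is only a sketch: the parameter values, the seed and the object names are arbitrary choices made for illustration.

```r
set.seed(42)                       # arbitrary seed so the sketch is repeatable

# Assumed "true" parameters, chosen only for illustration
a <- 5; b <- 2; sigma <- 3

x <- seq(1, 20, length.out = 200)
deterministic <- a + b * x                            # the straight line
stochastic <- rnorm(length(x), mean = 0, sd = sigma)  # normally distributed errors
y <- deterministic + stochastic

sim.mod <- lm(y ~ x)

# Each observation is its fitted value plus its residual
all.equal(unname(fitted(sim.mod) + residuals(sim.mod)), y)

# The residuals pooled together should look roughly normal
hist(residuals(sim.mod), col = "grey")
```

Because the data were generated from the model itself, the histogram of pooled residuals should be close to a normal curve centred on zero.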

11.2.2 Example

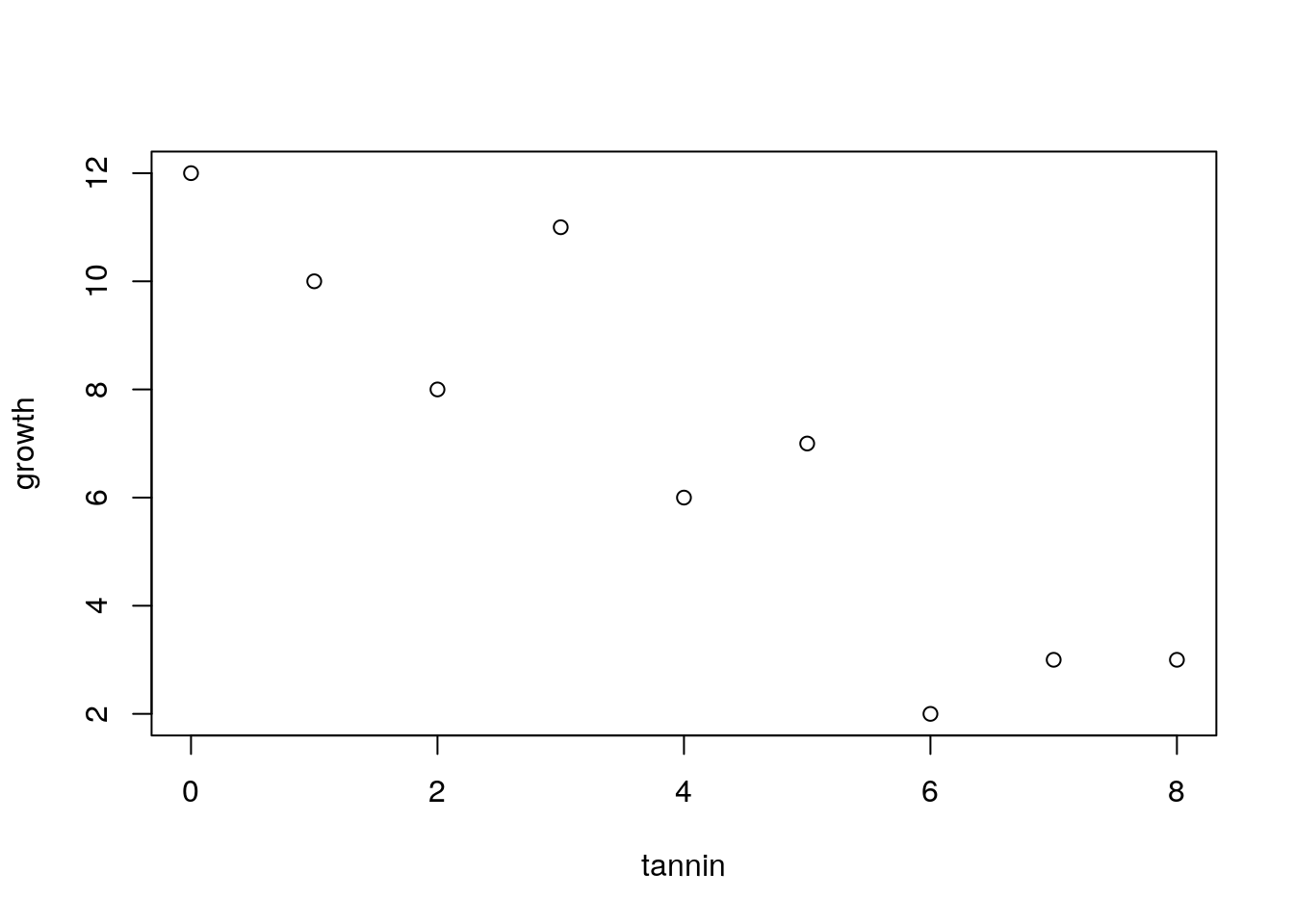

The example is taken from Crawley’s R book. It is very simplified and idealised, but serves to explain the concepts. Crawley’s own ecological research involves herbivory. Here he presents an example in which the growth of insect larvae on leaves with different concentrations of tannin has been measured. Tannin concentrations slow insect growth (Crawley unfortunately does not provide the units in which this is measured). The question is, how much is growth inhibited by an increase of 1% in tannin concentration? The first step after loading the data is to produce a scatterplot.

larvae<-read.csv("/home/msc_scripts/data/larvae.csv")

names(larvae)

## [1] "growth" "tannin"

attach(larvae)

plot(growth~tannin)

The scatterplot is produced using the syntax that we will use in the model. Growth is a function of (~) tannin.

We now fit a model and assign the results to an object in R. We can call this “mod”. This contains all the information about the fitted model.

mod<-lm(growth~tannin)

We can now look at the properties of the model. If we ask for an “anova”, R will produce the following output.

anova(mod)

## Analysis of Variance Table

##

## Response: growth

## Df Sum Sq Mean Sq F value Pr(>F)

## tannin 1 88.817 88.817 30.974 0.0008461 ***

## Residuals 7 20.072 2.867

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

This is similar to the table produced for an ANOVA involving categorical variables (factors). It can be interpreted in a similar way. Just as for an ANOVA we have an F ratio that represents the amount of variability that falls along the regression line divided by the residual variation.

The numerator degrees of freedom for a simple regression is always one. The denominator degrees of freedom is n-2, as we have estimated two parameters, the slope and the intercept. Thus we have found a very significant effect of tannin concentration on growth, F(1, 7) = 31, p < 0.001. You should report the R² value along with the p-value.
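The entries in the table are linked by simple arithmetic, which can be checked by hand. The sketch below copies the sums of squares and degrees of freedom from the anova output; the object names are just illustrative.

```r
# Sums of squares and degrees of freedom copied from the anova table above
ss.tannin <- 88.817; df.tannin <- 1
ss.resid  <- 20.072; df.resid  <- 7

# Mean squares are sums of squares divided by their degrees of freedom
ms.tannin <- ss.tannin / df.tannin
ms.resid  <- ss.resid / df.resid

# F ratio and its upper-tail probability
f.ratio <- ms.tannin / ms.resid
p.value <- pf(f.ratio, df.tannin, df.resid, lower.tail = FALSE)

# R squared: the share of the total sum of squares taken by the regression
r.squared <- ss.tannin / (ss.tannin + ss.resid)

round(c(F = f.ratio, p = p.value, R2 = r.squared), 4)
```

Up to rounding of the copied values, this should reproduce the F value and p-value in the table.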

A correlation test would have shown the same significant relationship, but without as much detail.

cor.test(growth,tannin)

##

## Pearson's product-moment correlation

##

## data: growth and tannin

## t = -5.5654, df = 7, p-value = 0.0008461

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## -0.9796643 -0.5972422

## sample estimates:

## cor

## -0.9031408

The additional information that we have with a regression concerns the two elements in the regression equation: the slope and the intercept.

summary(mod)

##

## Call:

## lm(formula = growth ~ tannin)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.4556 -0.8889 -0.2389 0.9778 2.8944

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 11.7556 1.0408 11.295 9.54e-06 ***

## tannin -1.2167 0.2186 -5.565 0.000846 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.693 on 7 degrees of freedom

## Multiple R-squared: 0.8157, Adjusted R-squared: 0.7893

## F-statistic: 30.97 on 1 and 7 DF, p-value: 0.0008461

Remember the equation we used.

\(y=a+bx+\epsilon\)

The intercept (a) is 11.76

The slope (b) is -1.22

Which means that growth (whatever this was measured in by Crawley) is reduced by 1.22 for each increase of 1% in tannin concentration.
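For a simple regression the two coefficients can also be found without lm. The data values below are typed in by hand so that the sketch runs without larvae.csv; they are an assumption, chosen because they reproduce the coefficients printed above, and the object names are illustrative.

```r
# Assumed copy of the larvae data (not read from larvae.csv)
tannin.x <- 0:8
growth.y <- c(12, 10, 8, 11, 6, 7, 2, 3, 3)

# Least-squares slope: covariance of x and y divided by the variance of x
b <- cov(tannin.x, growth.y) / var(tannin.x)

# The fitted line passes through the point of means, which fixes the intercept
a <- mean(growth.y) - b * mean(tannin.x)

round(c(intercept = a, slope = b), 4)

# The same coefficients from lm, for comparison
coef(lm(growth.y ~ tannin.x))
```

Seeing the slope as a ratio of covariance to variance also makes clear why its sign always matches the sign of the correlation coefficient.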

11.2.3 Confidence intervals

There is an issue with this way of summarising the results. If we only report the coefficients as given we have ignored the fact that there was unexplained variation in the model. This variation produces uncertainty regarding the true values of the parameters. The greater the unexplained variation (scatter or noise) around the line, the less confident we can be regarding their values. The statistical mechanism used in the calculations (that is not particularly complex, but can safely be taken on trust) allows us to find confidence intervals for the parameters. If the confidence intervals do not include zero then the parameters are significant. There are only a few cases where this is very meaningful for the intercept. We are usually more interested in the slope.

confint(mod)

## 2.5 % 97.5 %

## (Intercept) 9.294457 14.2166544

## tannin -1.733601 -0.6997325

So now we can go a bit further. Instead of giving a point estimate for the effect of tannin on growth we can state that the 95% confidence interval for the effect of a 1% increase of tannin on growth lies between -1.73 and -0.70.
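The interval for the slope is the estimate plus or minus a multiple of its standard error. A minimal sketch, copying the estimate, standard error and residual degrees of freedom from the summary output (the object names are illustrative):

```r
# Slope estimate, standard error and residual df copied from summary(mod)
estimate  <- -1.2167
std.error <- 0.2186
df.resid  <- 7

# A two-sided 95% interval uses the 97.5% quantile of the t distribution
t.crit <- qt(0.975, df.resid)

ci <- estimate + c(-1, 1) * t.crit * std.error
round(ci, 3)
```

With only 7 residual degrees of freedom the multiplier is noticeably larger than the familiar 1.96, which is why small samples give wide intervals.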

11.2.4 Prediction

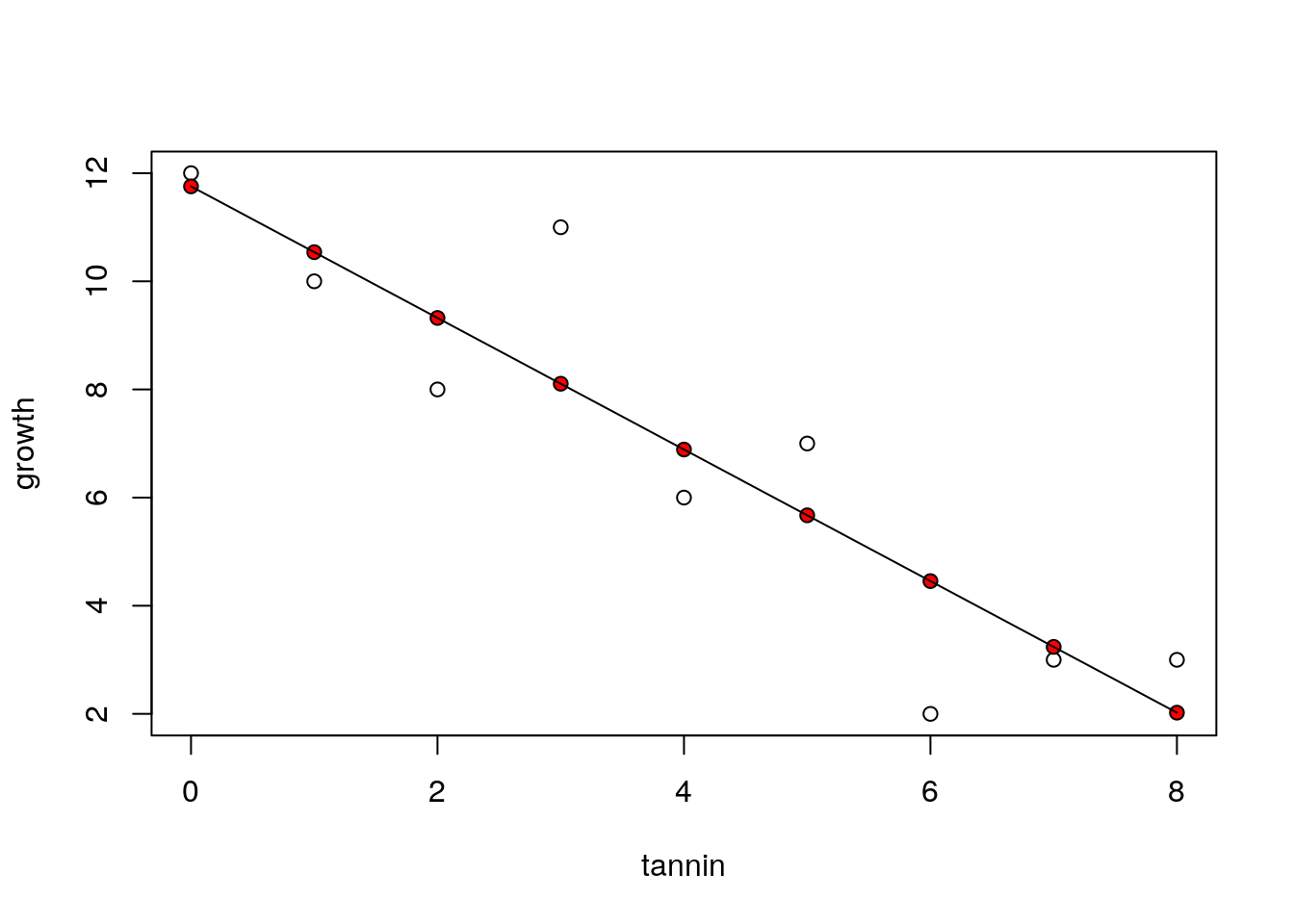

The equation can be used to predict the most likely value of y given a value of x. If we just ask R to “predict” we get the fitted values for the values of the explanatory variable that we used when fitting the model.

predict(mod)

## 1 2 3 4 5 6 7

## 11.755556 10.538889 9.322222 8.105556 6.888889 5.672222 4.455556

## 8 9

## 3.238889 2.022222

So we can plot out the data again and show the predicted values as red points and draw the regression line.

plot(growth~tannin)

points(tannin,predict(mod),pch=21,bg=2)

lines(tannin,predict(mod))

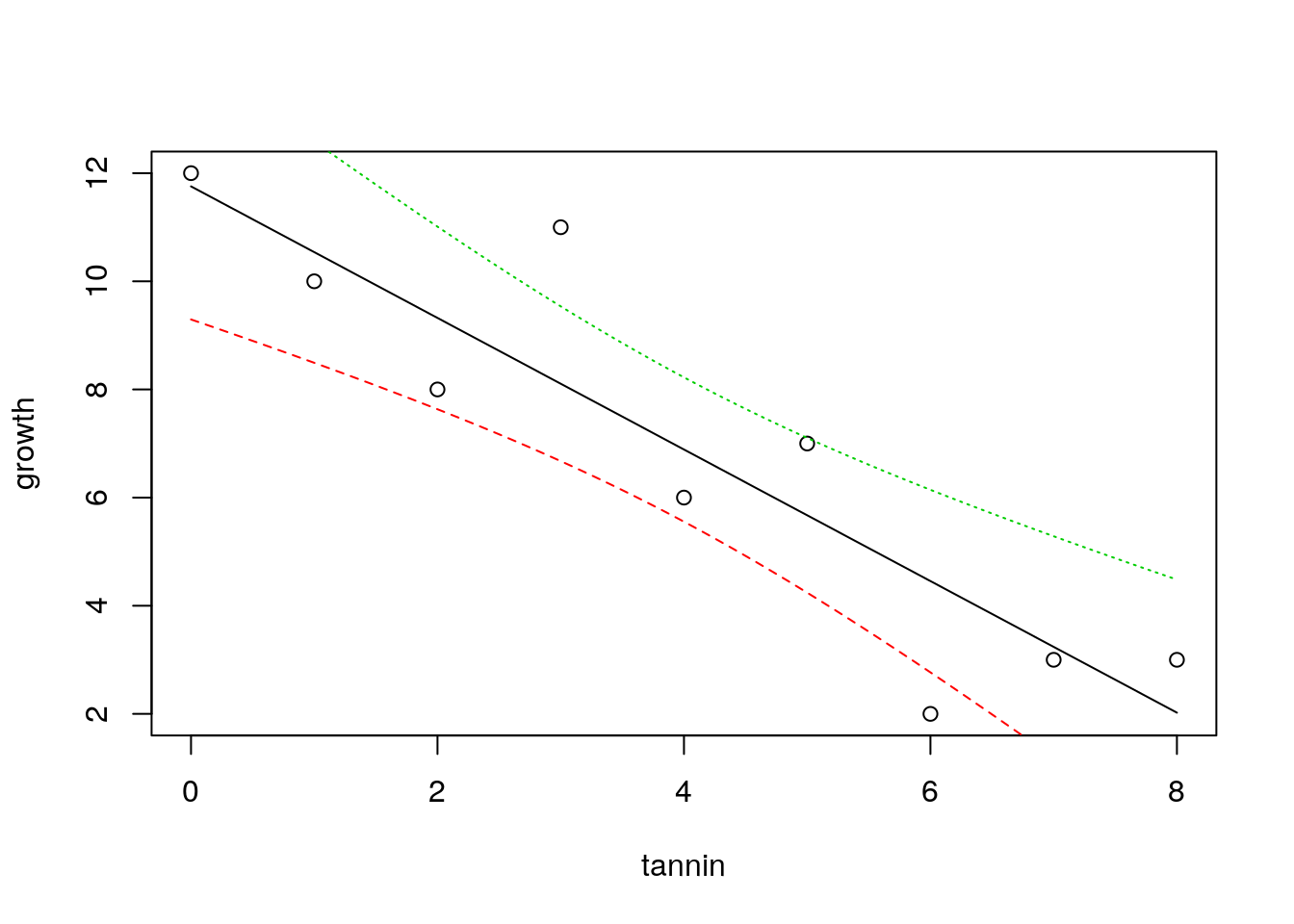

11.2.4.1 Prediction with confidence intervals

As we have seen, a statistical model takes into account uncertainty that arises as a result of variability in the data. So we should not simply look at the line of best fit as summing up a regression. We should add some indication of our confidence in the result to the figure.

To achieve this nicely in R requires a couple of extra steps. After plotting the data with plot(growth~tannin) we set up a sequence of 100 x values that lie between the minimum and the maximum. Now if we pass these to the predict function in R and ask for confidence intervals (that by default are 95%) we get the figure below.

plot(growth~tannin)

x<-seq(min(tannin),max(tannin),length=100)

matlines(x,predict(mod,list(tannin=x),interval="confidence"))

The confidence bands refer to the fitted model. They show uncertainty regarding the regression line and are calculated from the standard error.

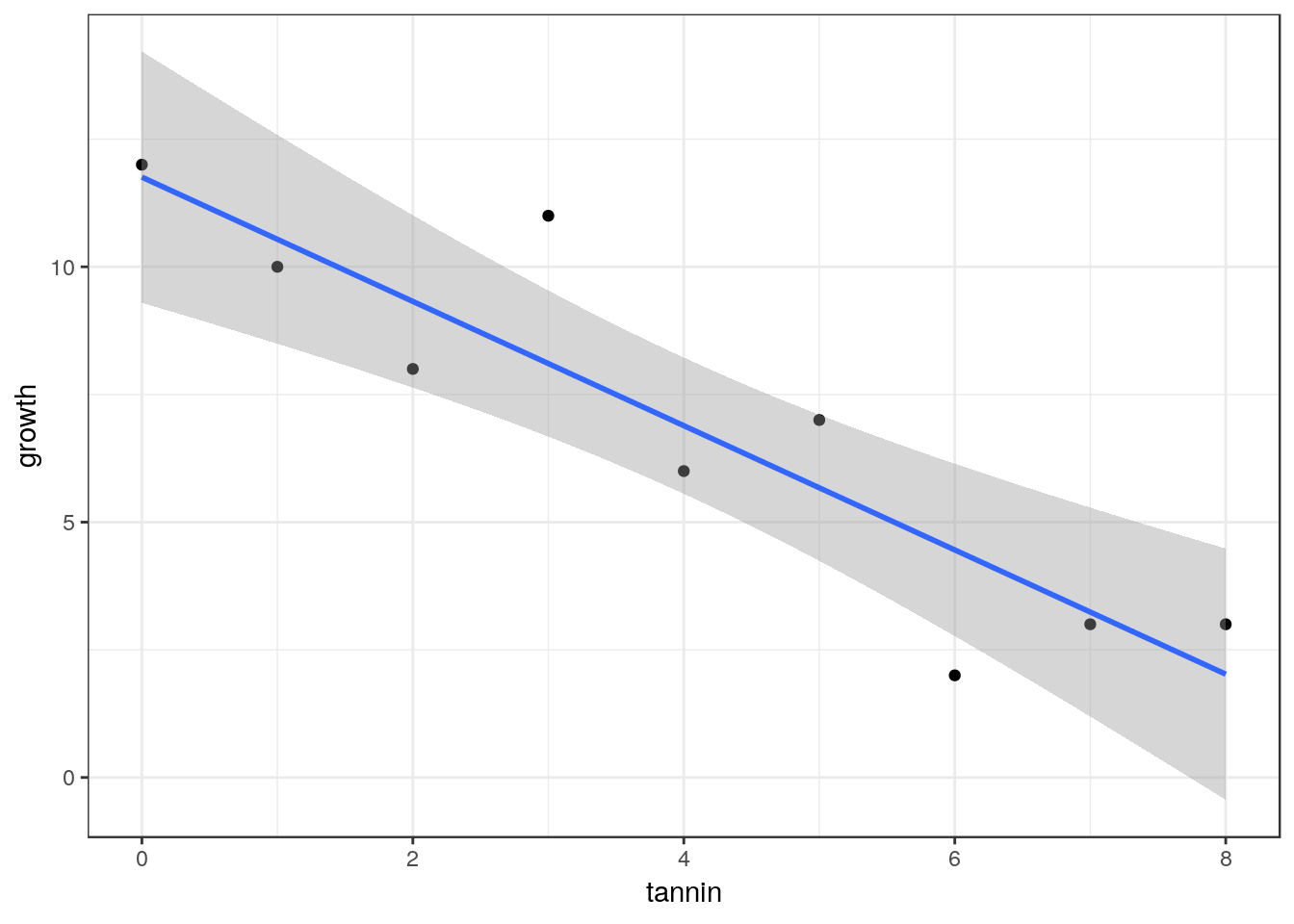

11.3 Using ggplot2 for confidence intervals

library(ggplot2)

g0<-ggplot(data=larvae, aes(x=tannin,y=growth))

g1<-g0+geom_point()

g1+geom_smooth(method="lm")

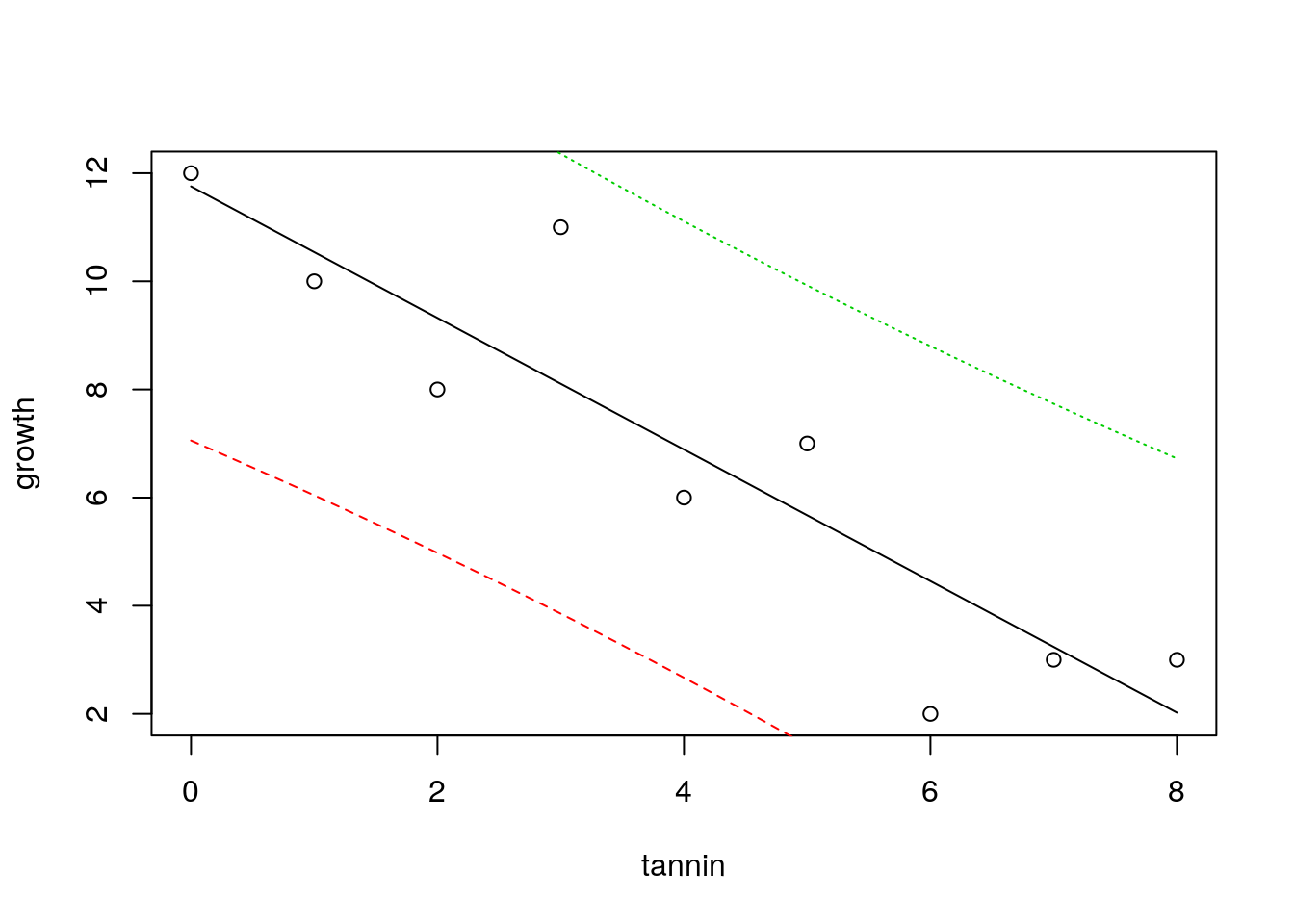

11.3.1 Prediction intervals

There is another way to look at uncertainty. If we know the value for tannin, where would we expect a single measured value for growth to lie? Notice that this is different. The confidence bands show where the mean value might lie if we measured growth many times. But in this case we want to know where we might expect a data point to fall. This is a much broader interval, and is based on the idea of theoretical normal curves falling around the regression line with a standard deviation estimated from the data. We cut off the tails of these curves.

plot(growth~tannin)

x<-seq(min(tannin),max(tannin),length=1000)

matlines(x,predict(mod,list(tannin=x),interval="prediction"))
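The difference between the two kinds of interval can be checked numerically at a single tannin value. The data frame below is an assumed hand-typed copy of the larvae data, used so the sketch runs on its own; the object names are illustrative.

```r
# Assumed copy of the larvae data (not read from larvae.csv)
larvae.copy <- data.frame(tannin = 0:8,
                          growth = c(12, 10, 8, 11, 6, 7, 2, 3, 3))
fit <- lm(growth ~ tannin, data = larvae.copy)

new.x <- data.frame(tannin = 4)   # a tannin value inside the observed range

conf <- predict(fit, new.x, interval = "confidence")
pred <- predict(fit, new.x, interval = "prediction")

# Widths of the two 95% intervals at the same tannin value
conf.width <- unname(conf[, "upr"] - conf[, "lwr"])
pred.width <- unname(pred[, "upr"] - pred[, "lwr"])
c(confidence = conf.width, prediction = pred.width)

# The prediction interval adds the residual variance to the
# variance of the fitted mean
s <- summary(fit)$sigma
se.fit <- predict(fit, new.x, se.fit = TRUE)$se.fit
manual.width <- 2 * qt(0.975, df.residual(fit)) * sqrt(s^2 + se.fit^2)
all.equal(pred.width, unname(manual.width))
```

Collecting more data narrows the confidence band, but the prediction interval can never be narrower than the scatter of individual points around the line.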

11.3.2 Diagnostics

It is not enough to simply fit a regression line, or any other model. We have to justify our choice of model and convince those who might use it that the assumptions have been adequately met. The question of how close the data are to meeting the assumptions requires model diagnostics.

The basic assumptions for regression

- Normally distributed errors

- Identically distributed errors over the range (homogeneity of variance)

- No undue influence of points with high leverage

- An underlying linear relationship

- Independent errors

In this rather artificial example all the assumptions are met. However real ecological data is rarely as simple as this. In order to justify the use of a regression model you must be able to show that the assumptions are not seriously violated. We will come on to what “seriously” means later.

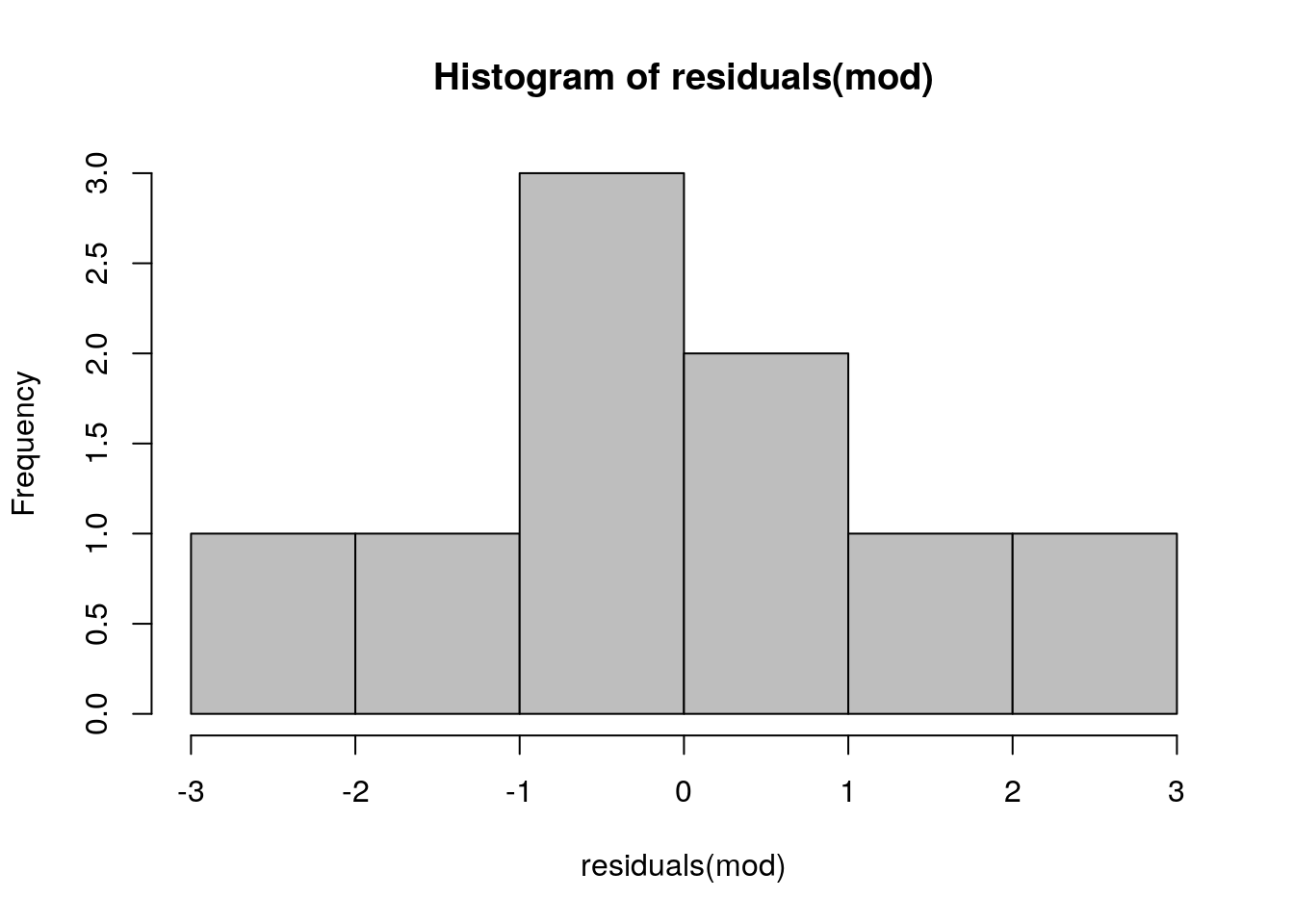

11.3.2.1 Normality

It is important to remember that the assumption of normality applies to the residuals after a model has been fitted. You do not test for the normality of the variable itself, as the relationship that you are modelling influences the distribution of the variable. You can look at the distribution of the residuals by plotting a histogram.

hist(residuals(mod),col="grey")

This looks good enough. A slightly more sophisticated way of spotting deviations from normality is to use a qqplot.

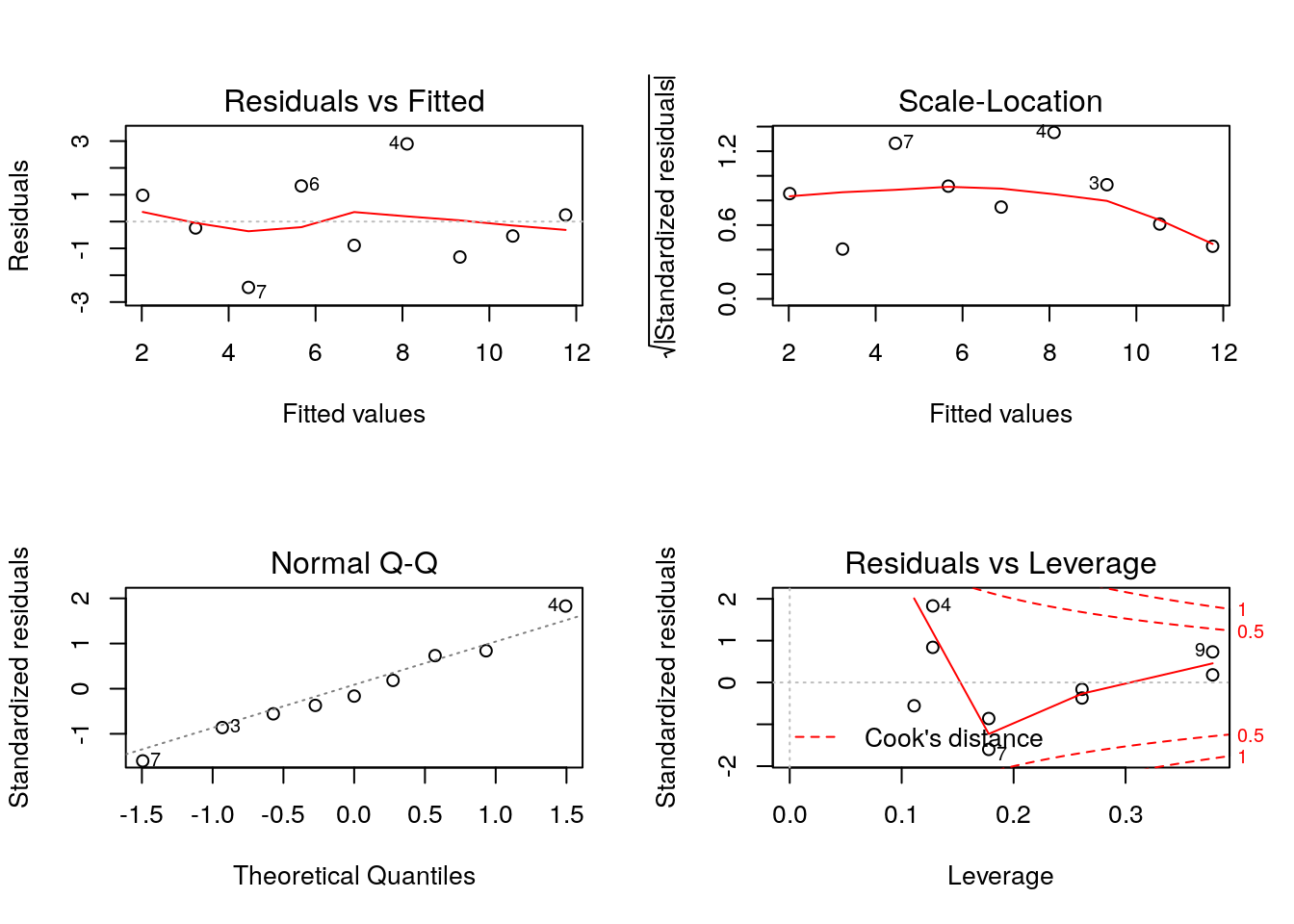

If we ask R to plot a fitted model, we actually get a set of diagnostic plots. There are six of these. By default R will produce four of them. The qqplot is the second in the series. It is used as a visual check of the normality assumption. If the assumption is met the points should fall approximately along the predicted straight line.

plot(mod,which=2)

Qqplots often have a sigmoid shape. This occurs when some of the extreme values fall further along the tail of the normal distribution than expected. Providing this effect is slight it is not a problem. However deviations from the line away from the extremes do show a major departure from the assumption. Interpretation of QQplots requires some experience, but they can be very useful.

If you have doubts about your ability to interpret the plot you could try using a statistical test of normality.

shapiro.test(residuals(mod))

##

## Shapiro-Wilk normality test

##

## data: residuals(mod)

## W = 0.98794, p-value = 0.9926

The problem with this test is that it becomes more sensitive as the number of observations increases. Thus you are more likely to get a p-value below 0.05 if you have a lot of data. However violations of normality become much less serious as the number of observations increases. So it can be safe to ignore a significant test result if the QQplot and histogram do not suggest major problems, providing you have more than around 30 observations. If the histogram shows clear skew then you should think about a data transform, weighted regression or using a generalised linear model. More about this later.

11.3.2.2 Homogeneity of variance

The assumption here is that the distance a point is likely to fall from the fitted line does not change along the x values. This is very often violated. For example if the values represent counts of individuals they are constrained to not fall below zero and their variability will tend to be some function of the expected value. This results in residuals that follow a Poisson distribution or, more likely, a negative binomial. The characteristic of both these distributions is that the scatter increases as the expected value increases.

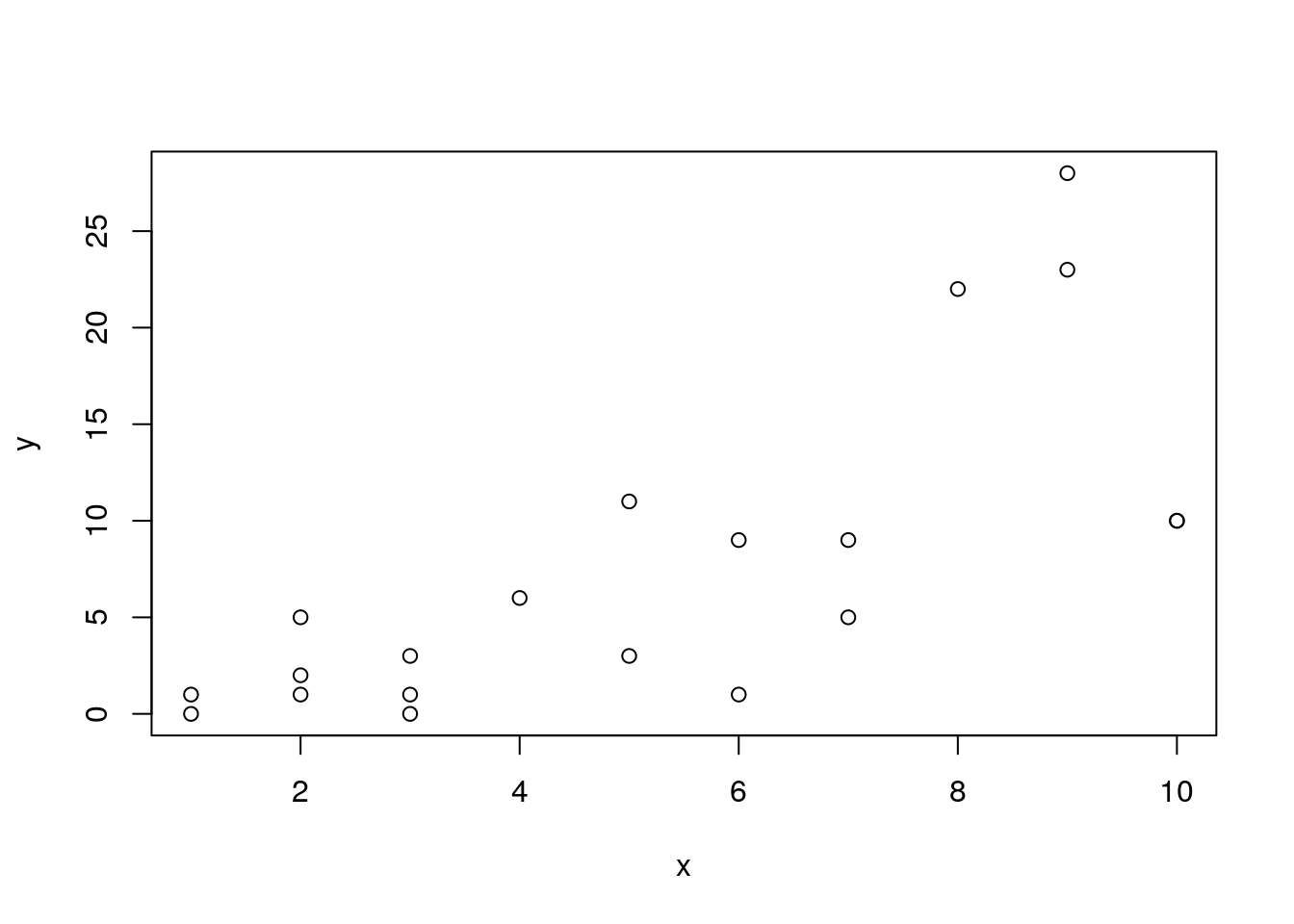

The following lines produce some data with this characteristic in order to illustrate the pattern.

set.seed(5)

library(MASS)

x<-sample(0:10,20,replace=TRUE)

y<-rnegbin(20,mu=x,theta=2)

plot(y~x)

mod.negbin<-lm(y~x)

You should be able to spot a “fan” effect.

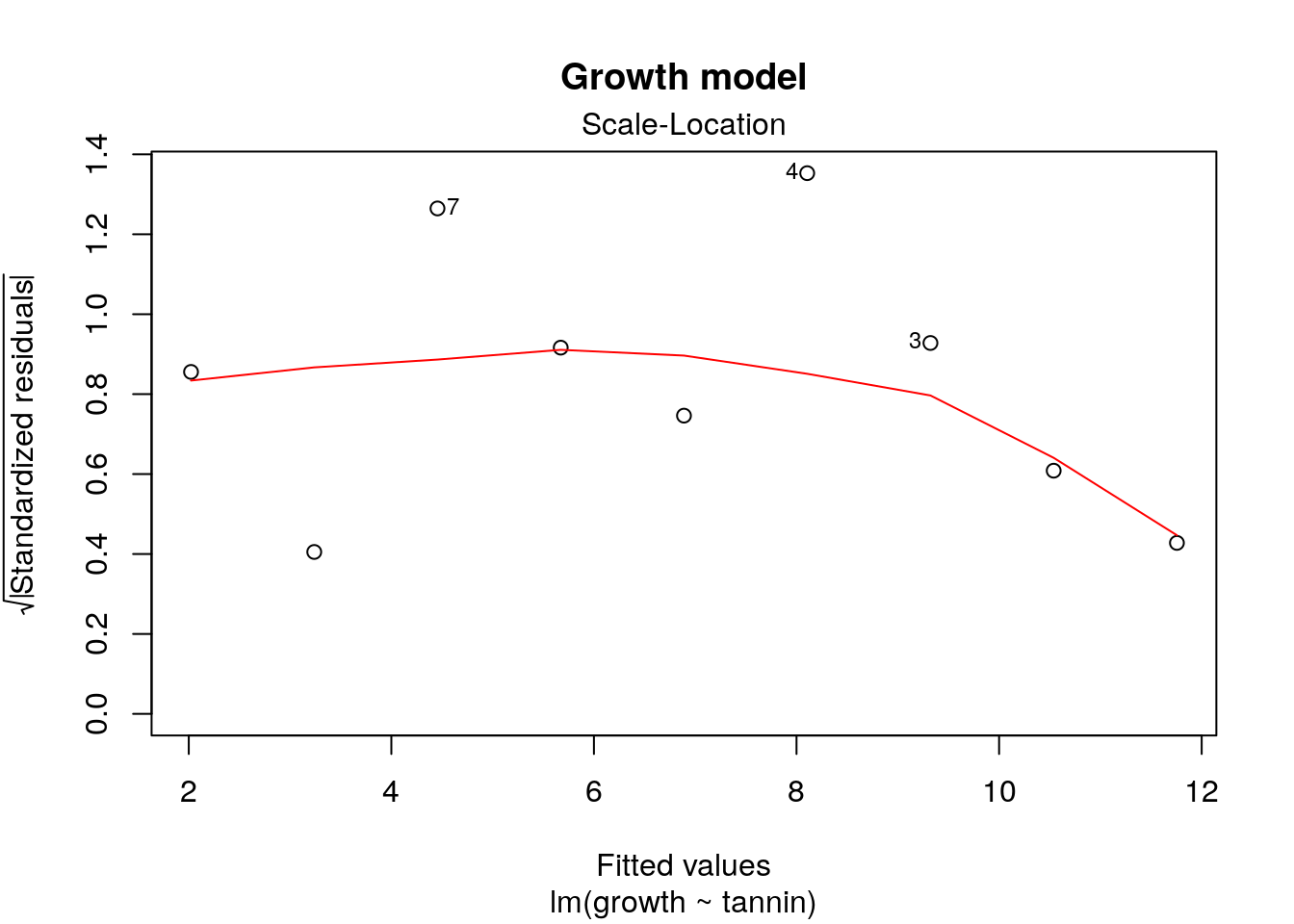

The third diagnostic plot in the series is used to see this effect more clearly. This plot shows the square root of the absolute values of the standardised residuals plotted against the fitted values. Because absolute values are used all the values are positive. If there is an increasing (or decreasing) trend in the absolute size of the residuals it should show up on the plot.

plot(mod.negbin,which=3)

The red line is meant to guide the eye, but you should look carefully at the pattern rather than the line itself.

This is the pattern for the original model, that does not have a problem with heterogeneity of variance.

plot(mod,which=3,main="Growth model")

If you are having difficulty interpreting the results you could try a test for the significance of the trend using a correlation test between the variables shown on the diagnostic plot.

cor.test(sqrt(stdres(mod.negbin)),predict(mod.negbin))

## Warning in sqrt(stdres(mod.negbin)): NaNs produced

##

## Pearson's product-moment correlation

##

## data: sqrt(stdres(mod.negbin)) and predict(mod.negbin)

## t = 4.8093, df = 7, p-value = 0.001945

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.5071470 0.9737071

## sample estimates:

## cor

## 0.8761687

cor.test(sqrt(stdres(mod)),predict(mod))

## Warning in sqrt(stdres(mod)): NaNs produced

##

## Pearson's product-moment correlation

##

## data: sqrt(stdres(mod)) and predict(mod)

## t = -0.50019, df = 2, p-value = 0.6666

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## -0.9803574 0.9236405

## sample estimates:

## cor

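## -0.3334484

The same sort of caveats apply to the literal interpretation of this test as a decision rule as the normality test. Although most violations of homogeneity of variance have to be taken seriously, large data sets may show statistically significant, but inconsequential violations.

Note the warnings in the output above. The square root of a negative residual is not defined, so R drops those points and each test uses only the points with positive residuals, which is why the degrees of freedom (7 and 2) are far lower than the sample sizes would suggest. Taking the absolute value of the residuals before the square root, as the diagnostic plot does, keeps every point in the test.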
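A minimal sketch of the same correlation test with the absolute value taken first is given here. It regenerates the simulated counts, so the exact numbers depend on the R version and its random number settings and are not shown; the names scale.loc and ct are illustrative.

```r
library(MASS)   # provides rnegbin() and stdres()

set.seed(5)
x <- sample(0:10, 20, replace = TRUE)
y <- rnegbin(20, mu = x, theta = 2)
mod.negbin <- lm(y ~ x)

# Take absolute values first, so negative residuals are kept
# rather than turned into NaN
scale.loc <- sqrt(abs(stdres(mod.negbin)))

# All 20 points now contribute to the test (18 degrees of freedom)
ct <- cor.test(scale.loc, fitted(mod.negbin))
ct
```

This matches the quantity shown on the third diagnostic plot, so the test and the figure now describe the same thing.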

11.3.2.3 Leverage

In the artificial example provided by Crawley all the points are equally spaced along the x axis. This is the “classic” form for a regression and prevents problems with leverage. However in ecology we often have to analyse data that does not have this property. Some of the x values on a scatterplot may fall a long way from the centre of the distribution. Such points potentially could have an excessive influence on the form of the fitted model.

The influence a point has on the regression is a function of leverage and distance from a line fitted using all the other points.

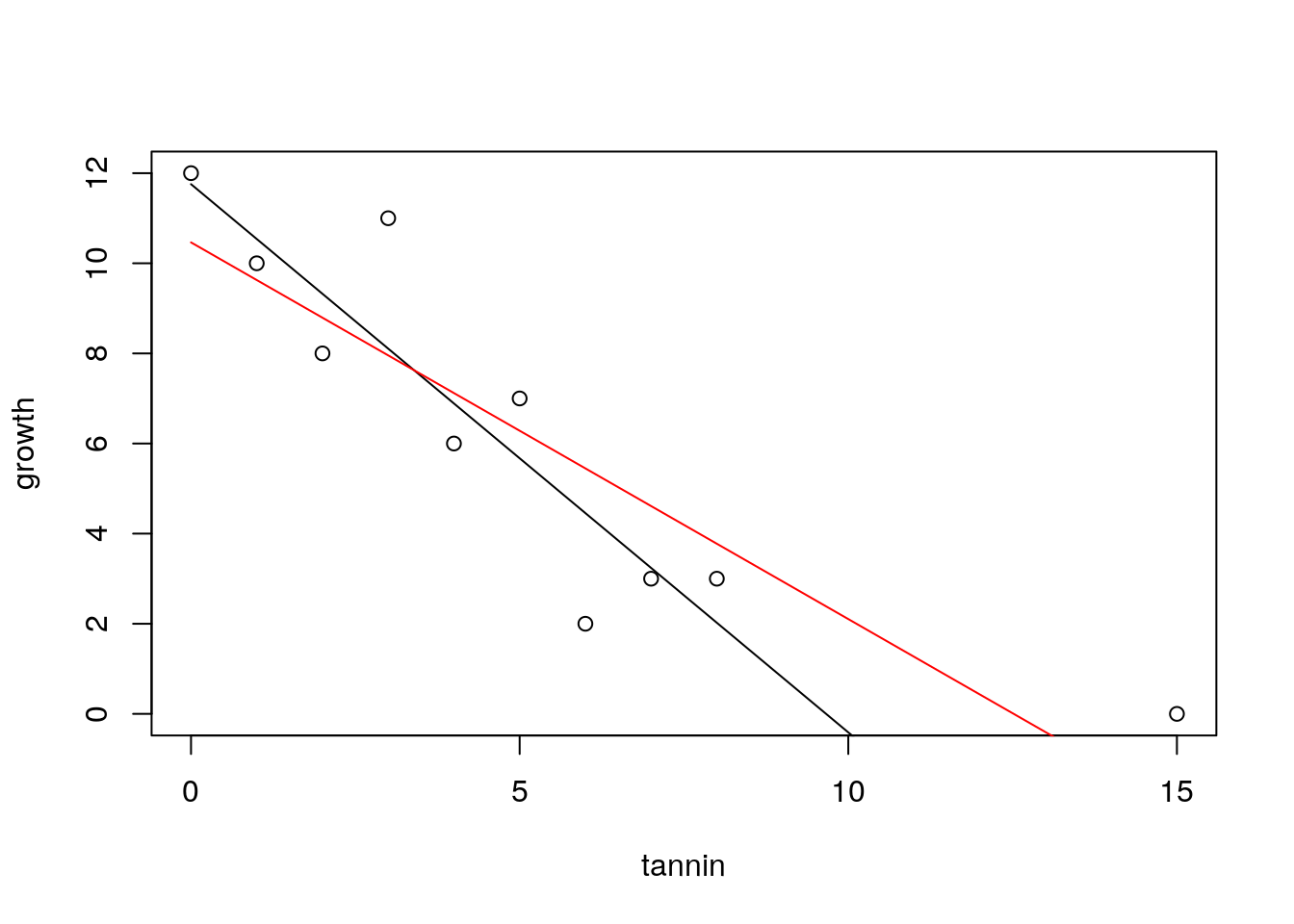

To illustrate this let’s add a point to the data at a high tannin density and assume that zero growth was measured at this value. How much does this new point influence the model?

tannin<-c(tannin,15)

growth<-c(growth,0)

We can plot the new data and show the effect of this one point on the regression line. It is shown in red.

new.mod<-lm(growth~tannin)

plot(growth~tannin)

lines(tannin,predict(mod,list(tannin=tannin)))

lines(tannin,predict(new.mod,list(tannin=tannin)),col=2)

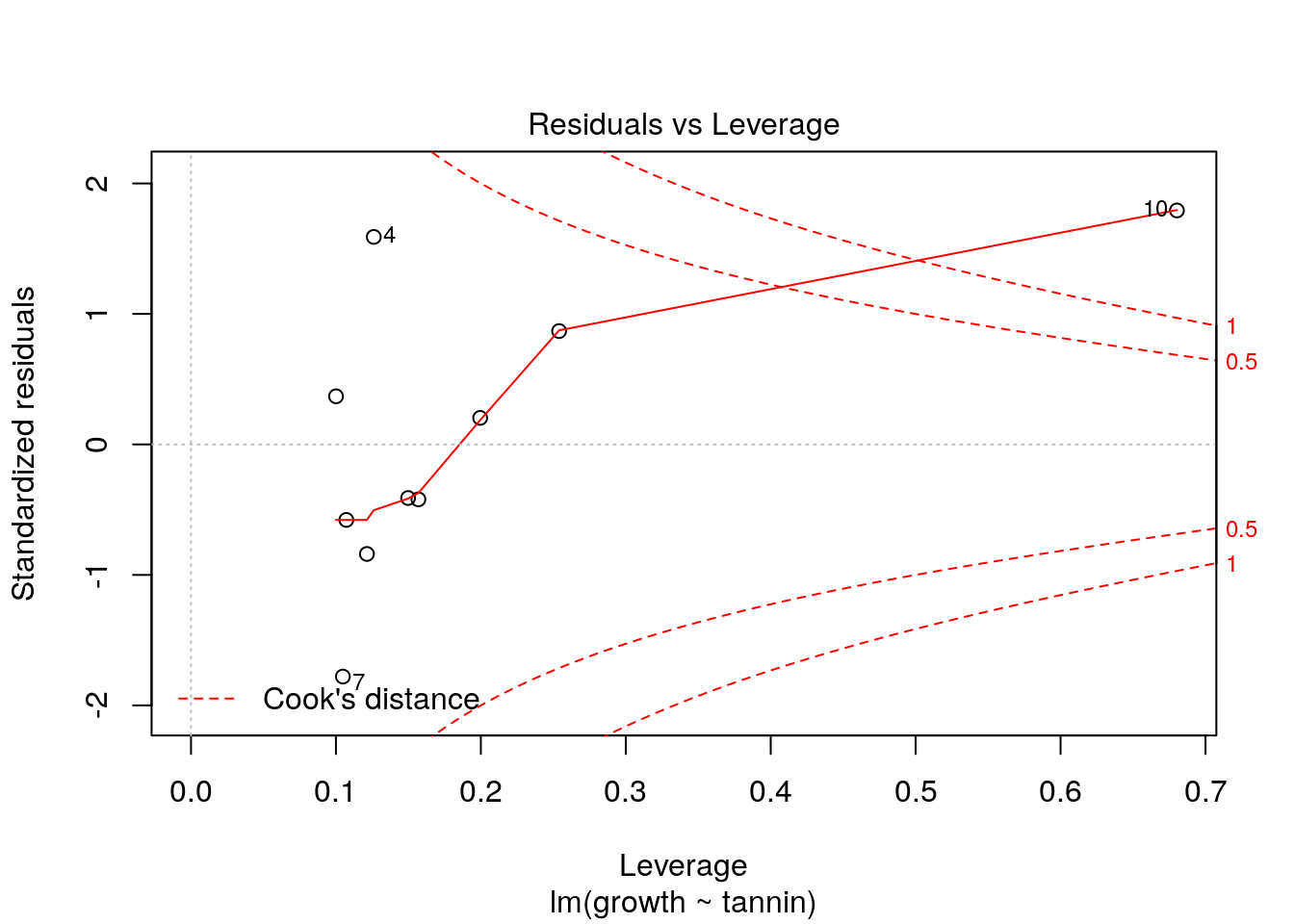

This data point has shifted the regression quite a lot due to a combination of its distance from the others (which gives it high leverage) and the fact that it lies a long way from the fitted line (high residual deviation). These two effects are captured by a quantity known as Cook’s distance.

The diagnostic plot shows leverage on the x axis and standardised residuals on the y axis. Large residuals with low leverage do not affect the model, so the safe area with low values for Cook’s distance (below 1) forms a funnel shape. Points falling outside this “safe area” can be flagged up.

There are no problem points in the original model.

plot(mod,which=5)

However the point that we added with both a high leverage and a high deviation from the original model shows up clearly when we diagnose the model with this point included.

plot(new.mod,which=5)

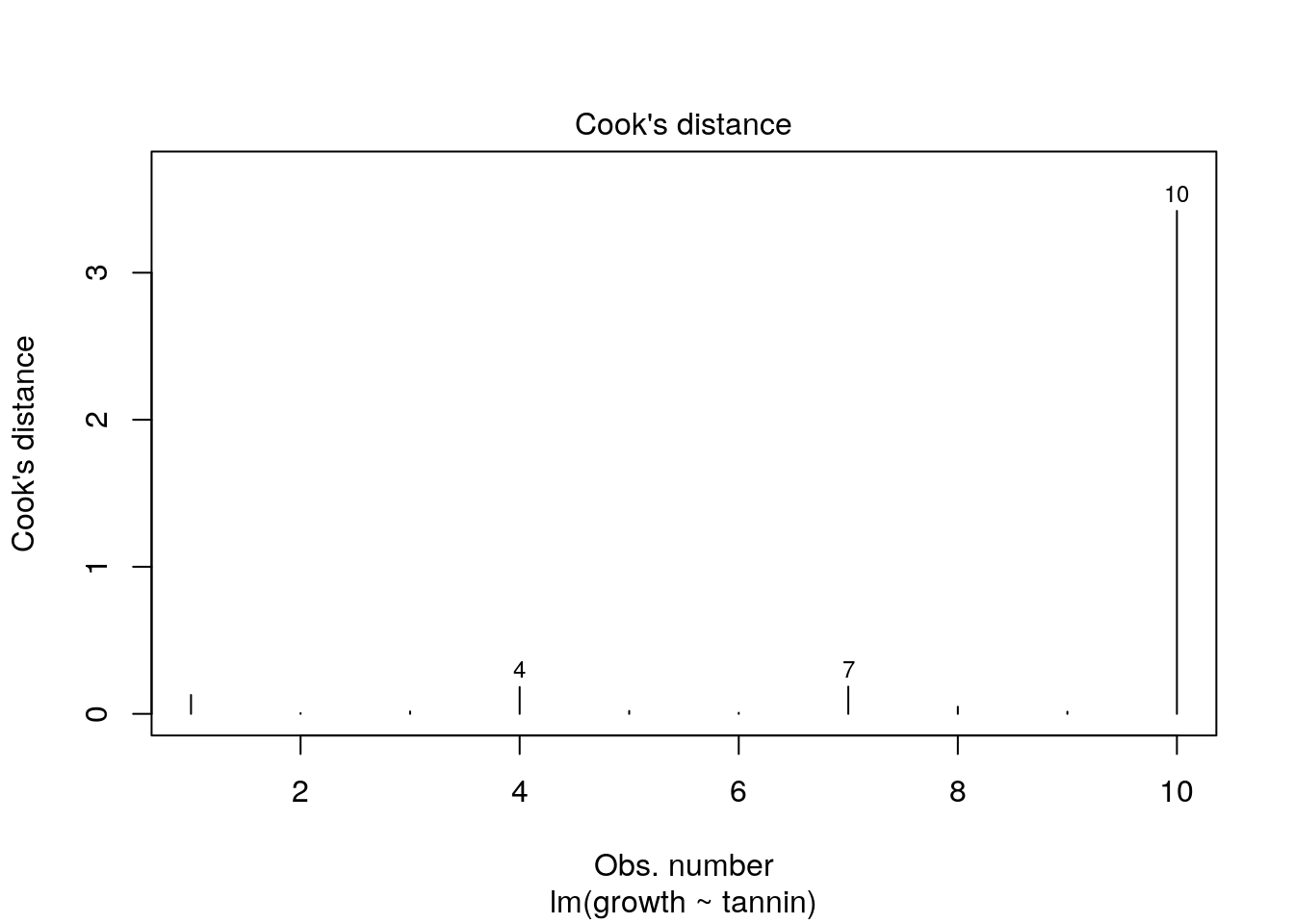

Another way of spotting these influential points is by looking at Cook’s distance directly. Values over 1 are typically considered to be extreme.

plot(new.mod,which=4)

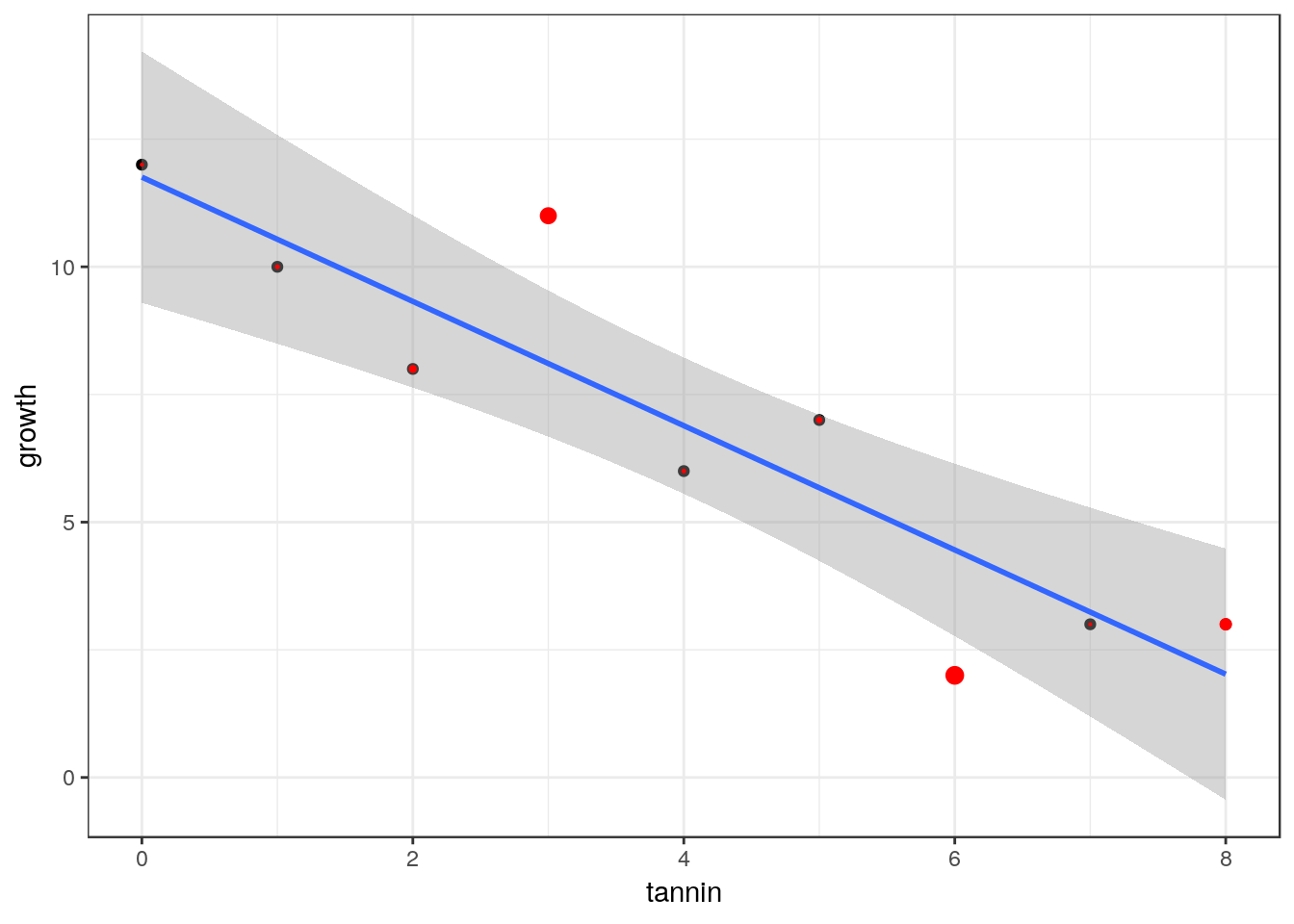
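Cook’s distance can be built up from the leverage and the standardised residuals, which shows how the two ingredients combine. The data below are an assumed hand-typed copy of the larvae data plus the extra point added in the text; the object names are illustrative.

```r
# Assumed copy of the larvae data plus the extra point (tannin 15, growth 0)
tannin.new <- c(0:8, 15)
growth.new <- c(12, 10, 8, 11, 6, 7, 2, 3, 3, 0)
fit.new <- lm(growth.new ~ tannin.new)

h <- hatvalues(fit.new)      # leverage of each point
r <- rstandard(fit.new)      # standardised residuals
p <- length(coef(fit.new))   # number of estimated parameters

# Cook's distance combines the standardised residual with the leverage
cooks.manual <- r^2 * h / (p * (1 - h))
all.equal(cooks.manual, cooks.distance(fit.new))

# Points above the conventional threshold of 1
which(cooks.manual > 1)
```

The term h / (1 - h) grows very quickly as leverage approaches 1, so a point far from the other x values needs only a moderate residual to become influential.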

larvae$cooks<-cooks.distance(mod)

g0<-ggplot(data=larvae, aes(x=tannin,y=growth))

g1<-g0+geom_point()

g1<-g1+geom_smooth(method="lm")

g1+geom_point(size=larvae$cooks*10,col="red")

11.3.3 Lack of independence

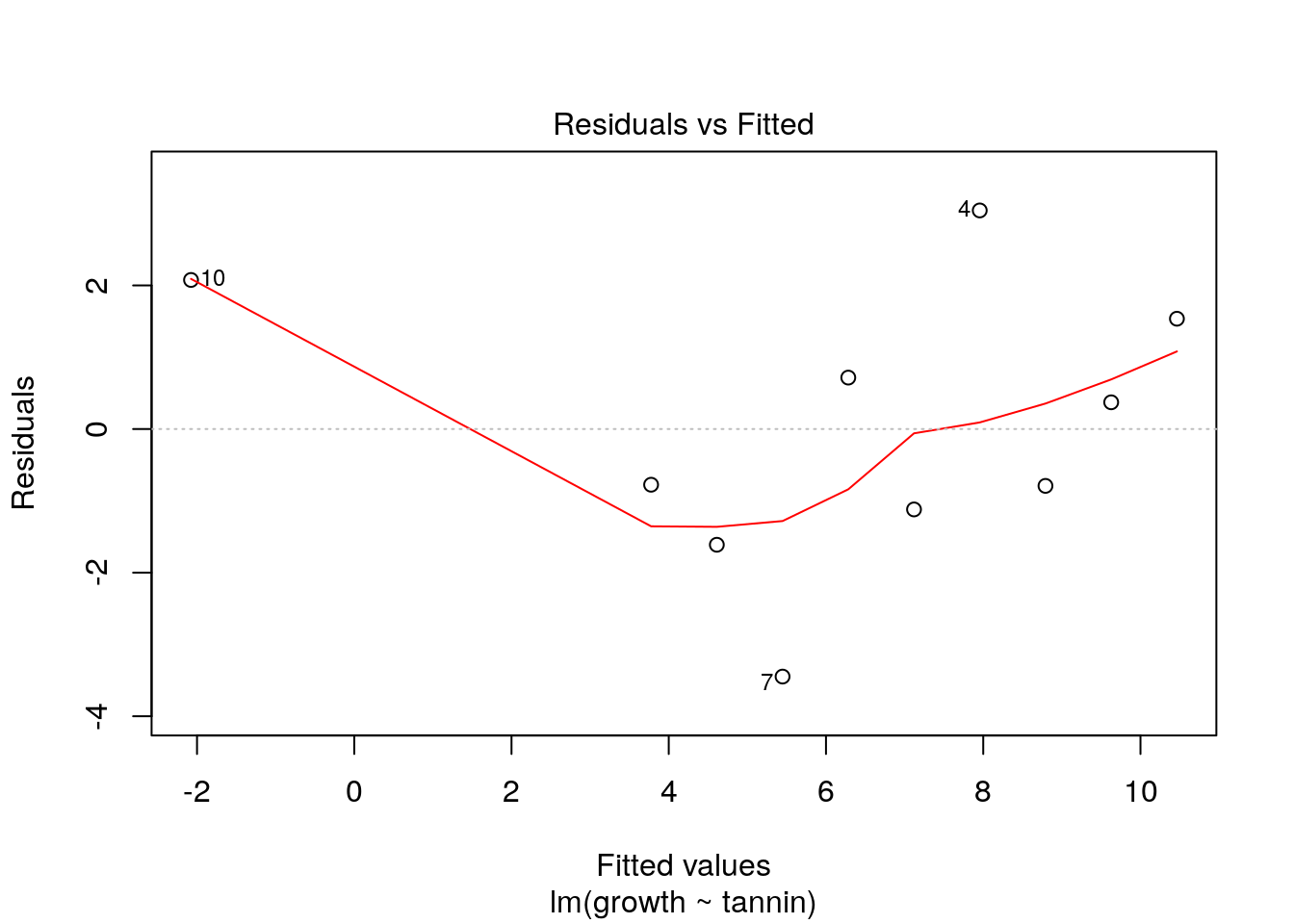

The value and sign of the residual deviation should not be related in any way to that of the previous point. If residual values form “clusters” on one side of the line or another it is a sign of lack of independence. There are two causes of this. The most serious is intrinsic temporal or spatial autocorrelation. This is discussed in the next section. A less serious matter is that the shape of the model is not appropriate for the data. We can see this in the last example. While the straight line might have been OK for the range of data originally given by Crawley, a straight line is a poor model when an additional data point is added at a high tannin level. At some point tannin stops all growth, so the underlying model must be asymptotic. Thus the model with the extra point has correlation in the raw residuals. High tannin values have positive residual deviation as a result of the poor fit of the model. This can be seen by using the first diagnostic plot that R produces.

plot(new.mod, which=1)

A common way of testing for lack of independence is the Durbin Watson test for serial autocorrelation.

library(lmtest)

## Loading required package: zoo

##

## Attaching package: 'zoo'

## The following objects are masked from 'package:base':

##

## as.Date, as.Date.numeric

dwtest(growth~tannin)

##

## Durbin-Watson test

##

## data: growth ~ tannin

## DW = 2.0168, p-value = 0.3679

## alternative hypothesis: true autocorrelation is greater than 0

11.3.3.1 Putting it all together

If you just ask R to plot a model you get all four plots. With some practice these should help you spot problems that need attention. Notice that to get four plots on one page you use par(mfcol=c(2,2)).

par(mfcol=c(2,2))

plot(mod)

11.4 Where do the sums of squares come from?

Contemporary approaches to analysis of variance and regression place the two techniques together under the heading of General Linear Models. This is the approach used in R. In both cases the formula that is dropped into the call to fit a linear model is similar. The difference is that one-way ANOVA uses a factor to predict fitted values and calculate residuals whereas regression uses a numerical variable.

In order to demonstrate the fundamental similarity between the two models we will set up some simulated data and then calculate the sum of squares from first principles.

11.4.0.1 ANOVA

Let’s make up a predictor variable. This is a factor with three levels, call them A, B and C. Now, let’s assume that the fitted values (in other words the deterministic pattern of response to the three levels of this factor) are mean values of 10, 12 and 20. These are the expected responses if there were no variability apart from that between groups.

set.seed(1)

predictor<-as.factor(rep(c("A","B","C"),each=10))

fitted<-rep(c(10,12,20),each=10)

Of course, there is going to be variability within each group. So let’s add this in. We will assume that the variability can be modelled as a normal distribution with mean zero and standard deviation of 5.

So the results we actually get are derived by adding these two components together.

residuals<-rnorm(30,mean=0,sd=5)

results<-fitted+residuals

d<-data.frame(predictor,results)

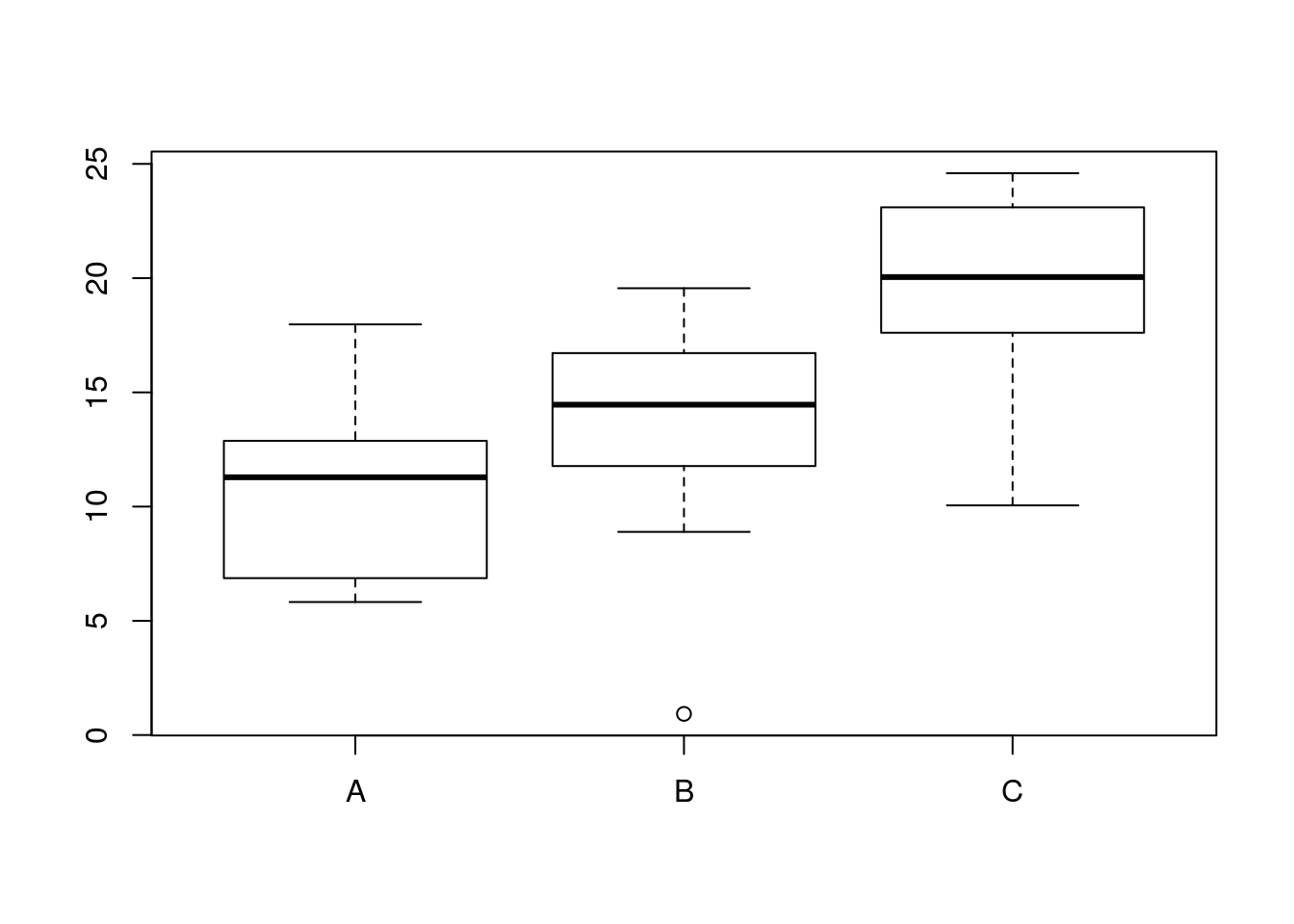

boxplot(results~predictor,data=d)

Of course, because this was a simulation experiment the actual means will be slightly different to the invented values. We can set up a data frame with true fitted and residual values like this.

mod<-lm(results~predictor,data=d)

d<-data.frame(predictor,results,fitted=fitted(mod),residuals=residuals(mod))

head(d)

## predictor results fitted residuals

## 1 A 6.867731 10.66101 -3.7932830

## 2 A 10.918217 10.66101 0.2572027

## 3 A 5.821857 10.66101 -4.8391570

## 4 A 17.976404 10.66101 7.3153901

## 5 A 11.647539 10.66101 0.9865250

## 6 A 5.897658 10.66101 -4.7633558

11.4.0.2 The numerator sum of squares

OK, so where do the numbers in the Anova table come from? The first step is to understand that the numerator sum of squares represents all the deterministic variability in the system. This is the variability attributable to differences between groups. The sum of squares is the sum of the squared deviations around the mean. But, which mean? In this case it is the overall mean value for the response.

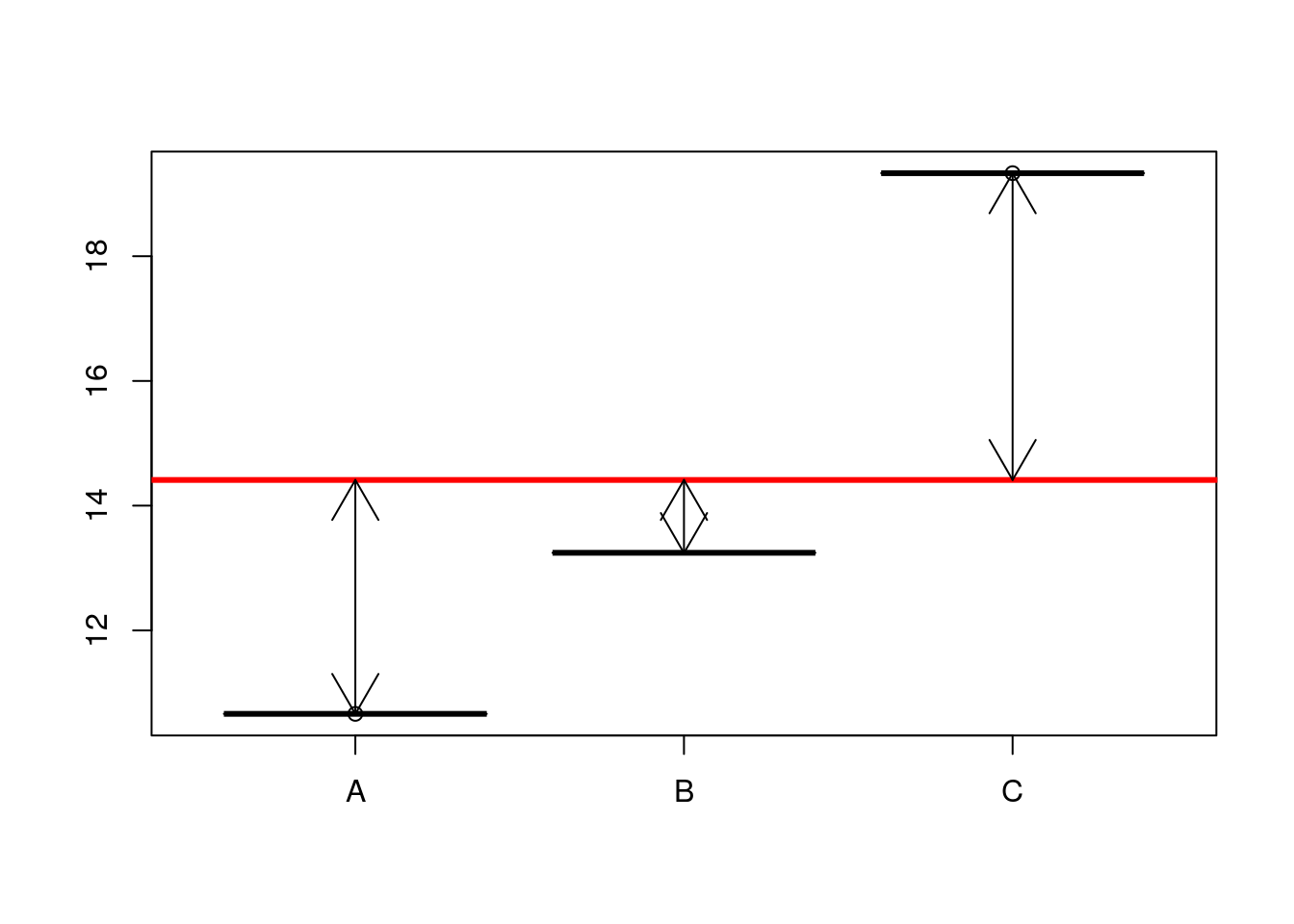

So, look at the boxplot again, but this time remove the variability and just plot the means. We can show the difference for each mean from the grand mean using arrows.

boxplot(fitted~predictor,data=d)

abline(h=mean(results),lwd=3,col=2)

arrows(1,10.66,1,mean(results),code=3)

arrows(2,13.24,2,mean(results),code=3)

arrows(3,19.33,3,mean(results),code=3)

OK, so for each mean there are 10 fitted values. The sum of squares is simply

nsqrs<-(d$fitted-mean(results))^2

nsumsqrs<-sum(nsqrs)

11.4.0.3 Denominator sum of squares

The denominator sum of squares in an Anova table represents all the variability that can be attributed to the stochastic component of the model. In other words, the residual variability. We produced that when simulating the data. The values are simply the residuals once the means for each group have been subtracted.

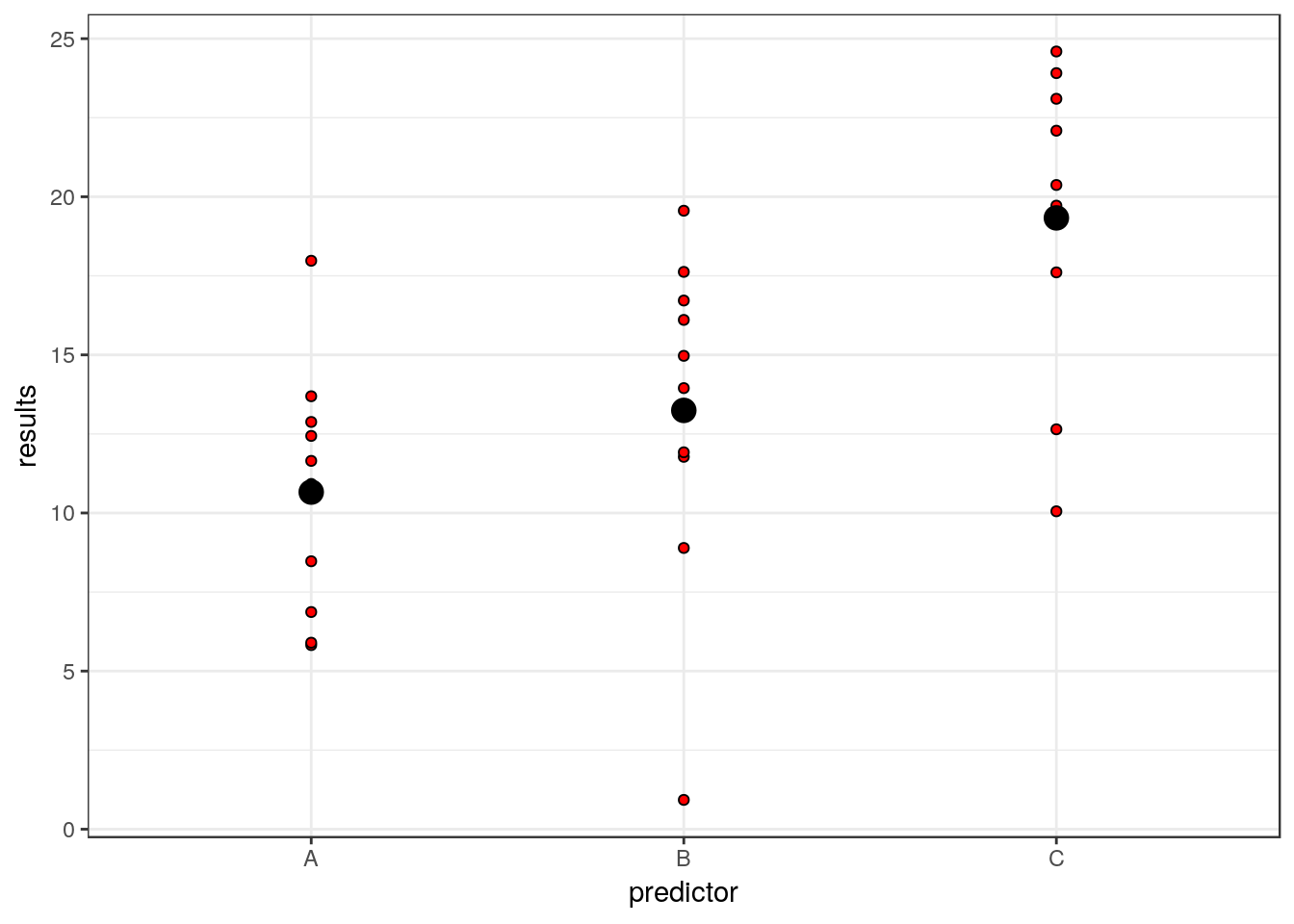

g0<-ggplot(d,aes(x=predictor,y=results))

g0+geom_point(pch=21,bg=2)+stat_summary(fun=mean,geom="point",size=4)

So each residual is the distance between the red points and the large black point representing the mean for that group.

dsqrs<-d$residuals^2

dsumsqrs<-sum(dsqrs)

mod<-lm(results~predictor)

anova(mod)

## Analysis of Variance Table

##

## Response: results

## Df Sum Sq Mean Sq F value Pr(>F)

## predictor 2 396.36 198.18 8.9192 0.001062 **

## Residuals 27 599.93 22.22

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

nsumsqrs

## [1] 396.3639

dsumsqrs

## [1] 599.9315

The degrees of freedom for the numerator sum of squares are the number of free parameters in the model. This is one less than the number of groups because we need to calculate the grand mean from the data, and thus use up one degree of freedom. In order to calculate the residuals we need to calculate three means in this case, so the denominator degrees of freedom are the total sample size minus three.
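The remaining columns of the table follow from the two sums of squares and their degrees of freedom. The sketch below repeats the simulation in a self-contained form and rebuilds the F ratio and p-value by hand; aov.mod and the other new names are illustrative. If the random number stream matches, the values should agree with the table above.

```r
set.seed(1)
predictor <- as.factor(rep(c("A", "B", "C"), each = 10))
results <- rep(c(10, 12, 20), each = 10) + rnorm(30, mean = 0, sd = 5)
aov.mod <- lm(results ~ predictor)

n <- length(results)            # total sample size
k <- nlevels(predictor)         # number of groups

ss.num <- sum((fitted(aov.mod) - mean(results))^2)  # between-group sum of squares
ss.den <- sum(residuals(aov.mod)^2)                 # residual sum of squares

df.num <- k - 1                 # groups minus one
df.den <- n - k                 # observations minus the number of group means

# Mean squares, F ratio and upper-tail probability
f.ratio <- (ss.num / df.num) / (ss.den / df.den)
p.value <- pf(f.ratio, df.num, df.den, lower.tail = FALSE)
c(F = f.ratio, p = p.value)

# For comparison with the first row of the table from anova()
anova(aov.mod)
```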

11.4.1 Regression

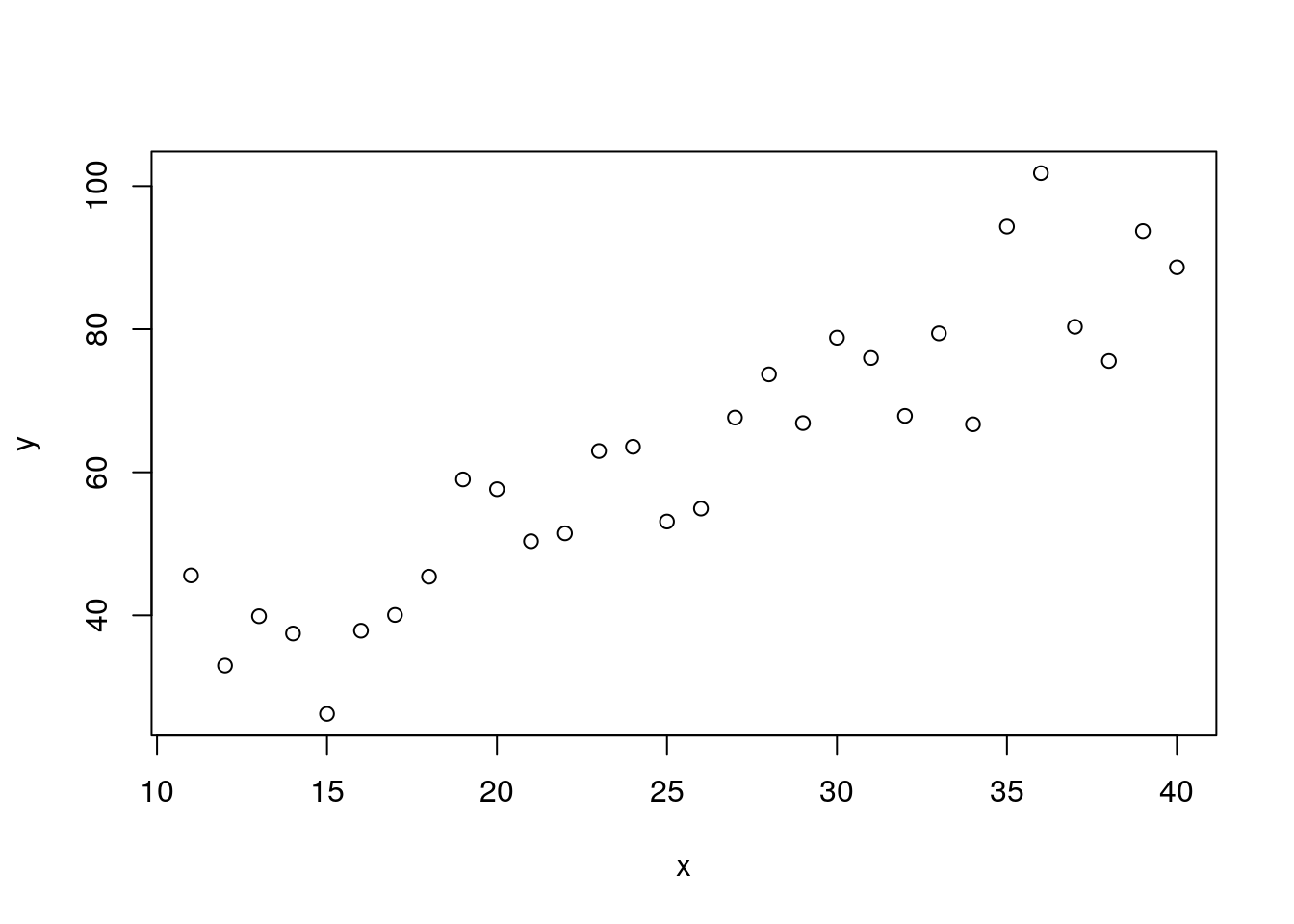

The set up for regression is in effect identical, apart from the fact that the fitted values are continuous rather than group means. So if we invent some data:

x<-11:40

y<-10+x*2+rnorm(30,0,10)

plot(y~x)

mod<-lm(y~x)

d<-data.frame(x,y,fitted=fitted(mod),residuals=residuals(mod))

head(d)

## x y fitted residuals

## 1 11 45.58680 32.84861 12.738183

## 2 12 32.97212 34.88166 -1.909533

## 3 13 39.87672 36.91470 2.962016

## 4 14 37.46195 38.94774 -1.485794

## 5 15 26.22940 40.98079 -14.751383

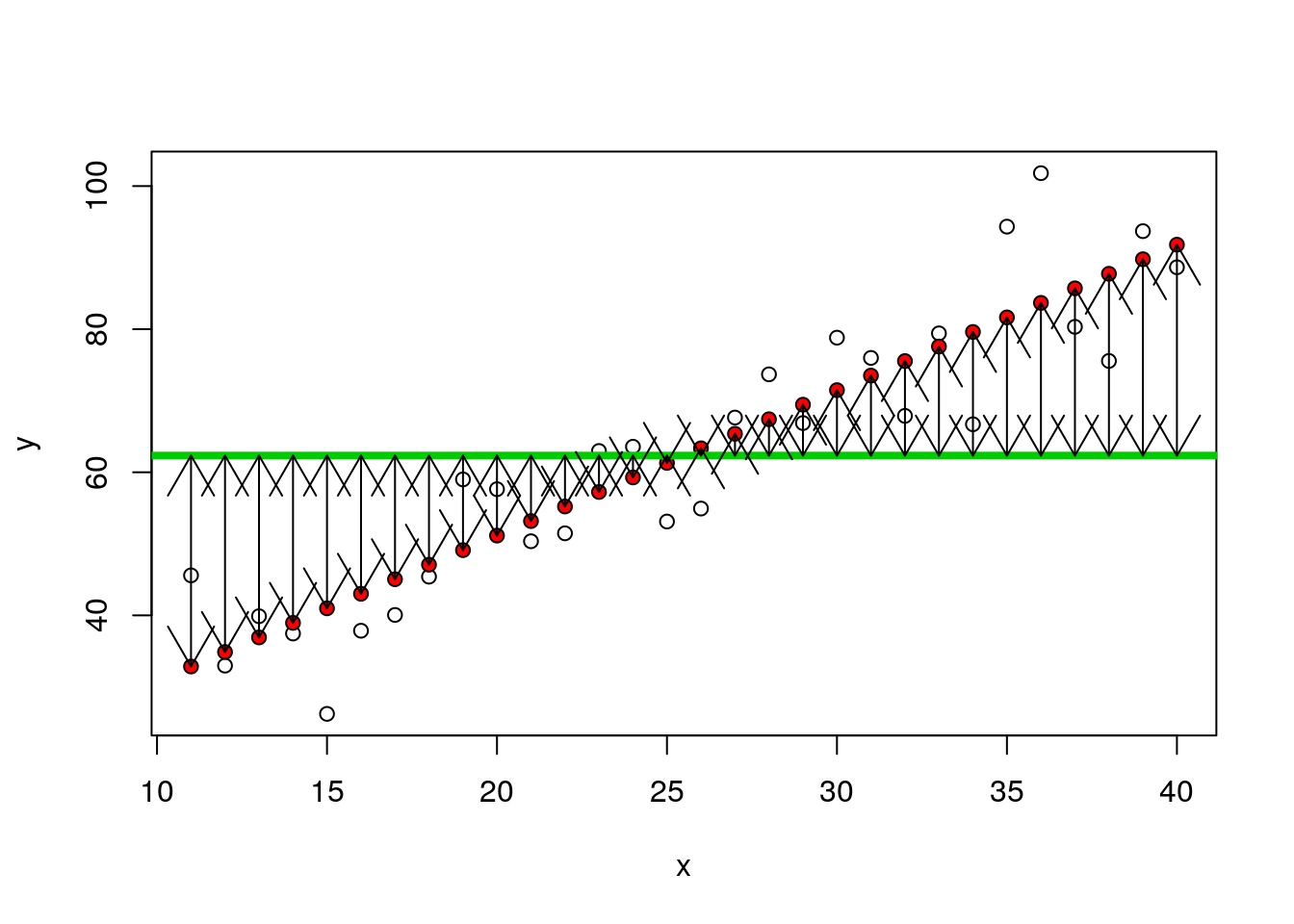

## 6 16 37.85005 43.01383 -5.163776plot(y~x)

points(x,fitted(mod),pch=21,bg=2)

abline(h=mean(y),lwd=4,col=3)

arrows(x,fitted(mod),x,mean(y),code=3)

nsqrs<-(d$fitted-mean(y))^2

nsumsqrs<-sum(nsqrs)

11.4.2 Residuals

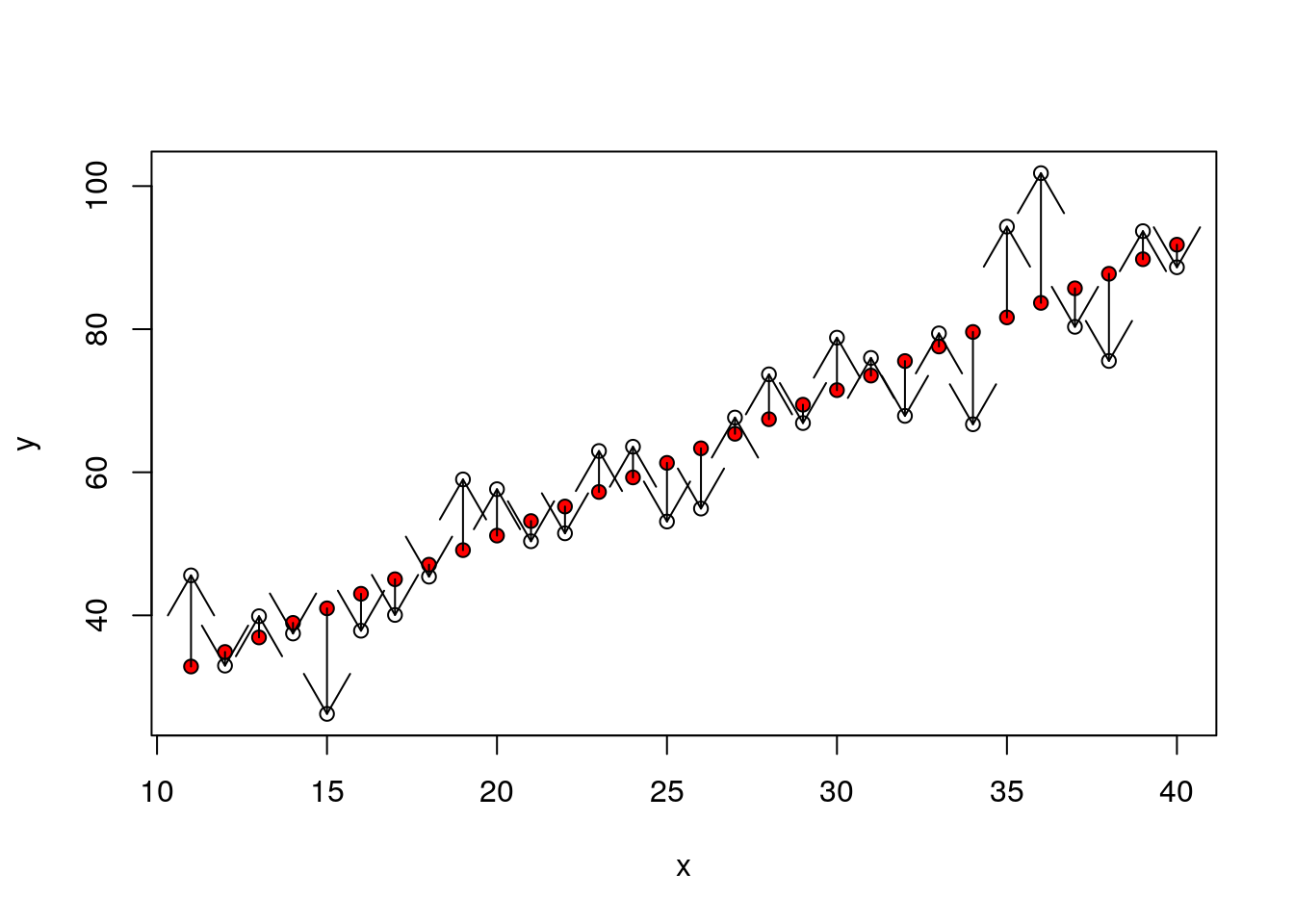

plot(y~x)

points(x,fitted(mod),pch=21,bg=2)

arrows(x,fitted(mod),x,y,code=2)

dsqrs<-d$residuals^2

dsumsqrs<-sum(dsqrs)

mod<-lm(y~x)

anova(mod)

## Analysis of Variance Table

##

## Response: y

## Df Sum Sq Mean Sq F value Pr(>F)

## x 1 9289.5 9289.5 141.99 1.759e-12 ***

## Residuals 28 1831.9 65.4

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

nsumsqrs

## [1] 9289.517

dsumsqrs

## [1] 1831.902

11.5 Where does R squared (coefficient of determination) come from?

The “explained variability” in the data is often reported using R squared. High values suggest that a large proportion of the variability is “explained” by the model, whether the model is a regression or an ANOVA. Where does that fit in? Well the total sum of squares is the sum of all the squared distances that we have calculated. If we divide the sum of squares attributable to the model by the total we get the proportion of the variability attributable to the model.
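Written out, and using SS for a sum of squares (a notation assumed here for brevity, not one used elsewhere in the chapter), the calculation is

\(R^{2}=\frac{SS_{model}}{SS_{total}}=\frac{SS_{model}}{SS_{model}+SS_{residual}}=1-\frac{SS_{residual}}{SS_{total}}\)

The first form is what the code that follows computes. The last form makes it clear that R squared approaches 1 as the residual sum of squares shrinks towards zero.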

totsumsqrs<-nsumsqrs+dsumsqrs

nsumsqrs/totsumsqrs

## [1] 0.8352817

We can see that this is the same as R provides by asking for a summary.

summary(mod)

##

## Call:

## lm(formula = y ~ x)

##

## Residuals:

## Min 1Q Median 3Q Max

## -14.751 -5.120 -1.579 5.365 18.129

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 10.4851 4.5945 2.282 0.0303 *

## x 2.0330 0.1706 11.916 1.76e-12 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 8.089 on 28 degrees of freedom

## Multiple R-squared: 0.8353, Adjusted R-squared: 0.8294

## F-statistic: 142 on 1 and 28 DF, p-value: 1.759e-12

11.5.1 Violations of assumptions

What do you do if your diagnostic analysis shows that there is a problem with the model? The first thing to realise is that all models are approximations of reality. As the statistician George E. P. Box famously stated, “All models are wrong … but some are useful.” If journal editors and referees only admitted papers which used the “correct” model, ecological publishing would stop in its tracks. The important point to be aware of is that not all violations of the assumptions are equally serious. Almost all of them can be handled by more advanced modelling, but sometimes it is simply enough to point out that a minor violation was noticed, but it was not considered serious enough to prevent further analysis, together with a clearly stated justification.

11.5.1.1 Normality of the residuals

This is considered to be the least important assumption of the model. Slight deviations from normality will not usually affect the conclusions drawn. Furthermore, some influential authors, e.g. Sokal and Rohlf, state that the central limit theorem means that the assumption can safely be ignored for large (n>30) samples. However, be careful with this, as the argument assumes that the residuals are non-normal but still have homogeneous variance. This combination is unlikely to occur in practice.
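A common way to look at this assumption is a quantile-quantile plot of the residuals, optionally backed up by a formal test. The sketch below uses simulated, hypothetical data and the base R functions qqnorm(), qqline() and shapiro.test(). It is only one possible check, not a prescribed procedure.

```r
# Simulated data with normally distributed errors (hypothetical values)
set.seed(2)
x <- runif(40, 0, 20)
y <- 5 + 1.5 * x + rnorm(40, sd = 3)
mod <- lm(y ~ x)
res <- residuals(mod)

# Quantile-quantile plot: points close to the line suggest approximate normality
qqnorm(res)
qqline(res)

# Shapiro-Wilk test: a small p-value suggests a departure from normality
sw <- shapiro.test(res)
sw$p.value
```

The plot is usually more informative than the test, since with large samples the test can flag deviations that are too small to matter.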

11.5.1.2 Fixed values of the independent variable

This assumption is in fact nearly always violated in ecological studies. We never measure anything without error. The main point to be aware of is that it is the size of the measurement error relative to the range of the variable that causes an issue. So, if we measure a variable such as tree height with an accuracy of 10 cm and the range of values falls between 5 m and 20 m there is no problem. However, if the range of heights were to be only between 10 m and 11 m an issue may arise. You should always aim to have a range of values for the explanatory variable that is large relative to the measurement error.
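The tree height example can be explored with a small simulation. The sketch below assumes a hypothetical response that increases with true height at a slope of 2, and a measurement error with a standard deviation of 0.1 m. It then compares the fitted slope when heights span 5 m to 20 m with the slope when they span only 10 m to 11 m. All numbers other than the height ranges are assumptions made for illustration.

```r
set.seed(3)
n <- 5000
meas_sd <- 0.1  # measurement error of about 10 cm, expressed in metres

# Fit a regression on heights measured with error and return the slope
slope_with_error <- function(lower, upper) {
  true_height <- runif(n, lower, upper)
  # Hypothetical response with a true slope of 2
  y <- 2 * true_height + rnorm(n, sd = 0.5)
  # The height we actually record includes measurement error
  measured <- true_height + rnorm(n, sd = meas_sd)
  unname(coef(lm(y ~ measured))[2])
}

wide <- slope_with_error(5, 20)     # error is small relative to the range
narrow <- slope_with_error(10, 11)  # error is large relative to the range

wide
narrow
```

With the wide range the estimated slope should be very close to 2. With the narrow range it is pulled towards zero, because a larger share of the variation in the recorded heights is measurement noise.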

11.5.1.3 Homogeneity of variance

Violations of this assumption can be quite serious. However, you should be aware that fixing the problem using techniques such as weighted regression will usually have little effect on the slope of the regression line, because the ordinary least squares estimate of the slope remains unbiased when the variance is heterogeneous. The correction mainly affects the p-values (typically making them larger) and the confidence intervals (typically making them wider). If the p-value from the uncorrected model is very small (p<0.001) then a correction for heterogeneity of variance is unlikely to result in a loss of significance. If the p-value is close to the conventional cut off (p<0.05) then the correction may well change your conclusions. In some cases the apparent heterogeneity is the result of outliers that need removal. Doing this will of course change the slope.
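To see what a weighted regression does in practice, the sketch below simulates hypothetical data in which the spread of the response increases with x, then compares an unweighted fit with a fit using the weights argument of lm(). The weights of 1/x^2 assume that the form of the variance is known, which is rarely the case with real data.

```r
set.seed(4)
x <- runif(100, 1, 20)
# Hypothetical data: the standard deviation of the errors grows with x
y <- 3 + 2 * x + rnorm(100, sd = 0.5 * x)

# Ordinary (unweighted) regression
ols <- lm(y ~ x)

# Weighted regression: weights proportional to 1 / variance (assumed known here)
wls <- lm(y ~ x, weights = 1 / x^2)

# Compare the estimates, standard errors and p-values
summary(ols)$coefficients
summary(wls)$coefficients
```

Comparing the two coefficient tables shows how much the slope, its standard error and the p-value change when the heterogeneity is taken into account for this particular simulated data set.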

11.5.1.4 Incorrect model form

A simple linear regression fits a straight line. In many ecological situations the true responses take the form of a curve of some kind. Asymptotes are very commonly predicted both from theory and “common sense”. For example, adding more nitrogen fertilizer beyond a certain point will not produce any more growth. The biggest problem for regression in these circumstances is not that the model does not fit. It may approximate one part of the curve quite well. The issue is that the functional form is misleading and does not represent the underlying process.
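The consequences of fitting a straight line to an asymptotic response can be sketched with simulated data. The example below assumes a hypothetical saturating curve with an asymptote at 50. It shows two symptoms: a systematic pattern in the residuals, and predictions beyond the data that exceed the asymptote.

```r
set.seed(5)
x <- seq(1, 100, length.out = 50)
# Hypothetical saturating response that levels off at 50
y <- 50 * x / (10 + x) + rnorm(50, sd = 1)

mod <- lm(y ~ x)
res <- residuals(mod)

# Residuals are systematically negative at both ends and positive in the middle
low_end <- mean(res[x < 5])
middle <- mean(res[x > 20 & x < 60])
high_end <- mean(res[x > 95])
c(low_end = low_end, middle = middle, high_end = high_end)

# Extrapolating the straight line predicts a value above the asymptote
pred_300 <- unname(predict(mod, newdata = data.frame(x = 300)))
pred_300
```

A plot of the residuals against the fitted values, for example with plot(mod, which = 1), would show the same curved pattern.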

11.5.1.5 Influential outliers

We have seen that an outlier does not necessarily influence the regression unless it also has high leverage. You have to think carefully before simply removing these points. If points at the extreme ends of the x axis have large residuals it may be that the model is badly formed. We have seen the potential for this in the example above. In this case you may want to restrict a linear regression to the linear part of the relationship and remove data at the ends. Flexible models such as GAMs, which we will see later, can deal with the issue. You may also use a data transformation to pull the extreme values in. It may well turn out that the issue is simply due to mistaken measurements, in which case the extreme values are rejected after checking. There are also other methods to formally handle the problem, such as robust regression.
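Leverage and influence can be quantified with the base R functions hatvalues() and cooks.distance(). The sketch below adds a single hypothetical point, far out on the x axis and well off the line, to simulated data and shows how it is flagged and how much it changes the slope.

```r
set.seed(6)
x <- runif(30, 0, 10)
y <- 2 + 3 * x + rnorm(30, sd = 2)

# Add one hypothetical point with an extreme x value that falls well off the line
x_out <- c(x, 30)
y_out <- c(y, 20)

mod_all <- lm(y_out ~ x_out)

# Leverage (hat values) and influence (Cook's distance) for every observation
lev <- hatvalues(mod_all)
cook <- cooks.distance(mod_all)
most_leverage <- unname(which.max(lev))
most_influence <- unname(which.max(cook))
c(most_leverage, most_influence)

# Compare the slope with and without the added point
mod_drop <- lm(y ~ x)
slope_all <- unname(coef(mod_all)[2])
slope_drop <- unname(coef(mod_drop)[2])
c(slope_all = slope_all, slope_drop = slope_drop)
```

Here the added point is observation 31, and it should be identified as having both the highest leverage and the largest Cook's distance. Whether such a point should be removed is a separate question that depends on why it is extreme.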

11.5.1.6 Independence of the errors

This is the big issue. Violations of this assumption are always serious. The main problem is that if observations are not independent the model is claiming many more degrees of freedom (replication) than is justified. This means that the model cannot be generalised. The issue affects many, if not all, ecological studies to some extent. Measurements are rarely truly independent from each other in space and time. The issue affects the interpretation of the p-values and confidence intervals of the model. While the model may still be suitable for prediction within its sample space, it will not generalise beyond it. In the next class we will look at an example in detail that may help to clarify this.
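The effect of non-independence on p-values can be illustrated with a simulation. The sketch below generates pairs of variables that have no true relationship and counts how often a regression declares the slope significant at the 0.05 level. It assumes a simple first order autoregressive structure, simulated with arima.sim(), as a stand-in for the temporal or spatial dependence found in real data. The settings are hypothetical.

```r
set.seed(7)
n_sims <- 500
n_obs <- 50

# p-value for the slope when x and y are generated separately (no true relationship)
p_value_unrelated <- function(rho) {
  if (rho == 0) {
    # Independent observations
    x <- rnorm(n_obs)
    y <- rnorm(n_obs)
  } else {
    # Each series is autocorrelated: neighbouring observations resemble each other
    x <- as.numeric(arima.sim(model = list(ar = rho), n = n_obs))
    y <- as.numeric(arima.sim(model = list(ar = rho), n = n_obs))
  }
  summary(lm(y ~ x))$coefficients[2, 4]
}

p_indep <- replicate(n_sims, p_value_unrelated(0))
p_dep <- replicate(n_sims, p_value_unrelated(0.9))

# Proportion of simulations with a "significant" slope
rate_indep <- mean(p_indep < 0.05)
rate_dep <- mean(p_dep < 0.05)
c(independent = rate_indep, autocorrelated = rate_dep)
```

With independent observations the proportion should be close to the nominal 0.05. With strongly autocorrelated observations it is far higher, which is what claiming more replication than is justified looks like in practice.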